Fix #28157

Backport #28691

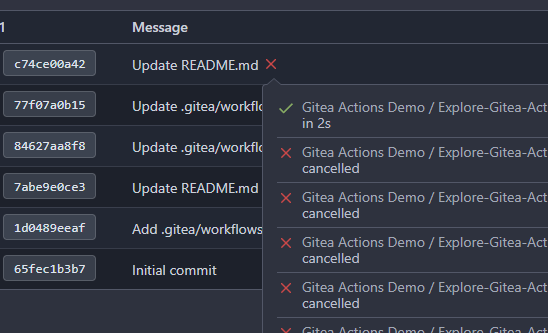

This PR fixes possible bugs in Actions schedules.

- Move `UpdateRepositoryUnit` and `SetRepoDefaultBranch` from models to the service layer.
- Remove schedule plans from the database and cancel waiting and running schedule tasks in a repository when its Actions unit is disabled, either for the repository or globally.
- Remove schedule plans from the database and cancel waiting and running schedule tasks in a repository when its default branch changes.

Fix #28056

Backport #28361

This PR checks whether the repository has zero branches in the database when a branch is pushed. If so, the repository has not been synced yet.

This happens when a user upgrades from v1.20 to v1.21 and then pushes branches without visiting the repository in the web UI: all repository routes check whether a branch sync is necessary, but push has no such check.

Every repository is in one of two states: synced or not synced. A repository with zero branches in the database is assumed to be not synced; otherwise it is synced. If the repository is synced, we only need to update the branch or insert a new one; otherwise we do a full sync. As a result, a push adds almost no extra load. This performs better than the alternative approach.

In the implementation, we first try to update the branch. If the update affects more than zero records, we are done, because the branch is already in the database. If no record is affected, the branch does not exist in the database, and there are two possibilities: either this is a new branch and we just need to insert the record, or the branches have not been synced and we need to sync all of them into the database.
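The update-first flow described above can be sketched roughly as follows. This is a minimal illustration, not the actual Gitea code: `branchTable`, `update`, and `onPush` are hypothetical names, and an in-memory map stands in for the database table.

```go
package main

import "fmt"

// branchTable is a hypothetical in-memory stand-in for the branch table
// (branch name -> commit ID).
type branchTable map[string]string

// update mimics an UPDATE statement and returns the number of affected rows.
func (t branchTable) update(name, commitID string) int {
	if _, ok := t[name]; !ok {
		return 0
	}
	t[name] = commitID
	return 1
}

// onPush applies the decision described above: update first, then either
// do a full sync (no branches recorded yet) or insert the new branch.
func onPush(t branchTable, gitBranches map[string]string, name, commitID string) string {
	if t.update(name, commitID) > 0 {
		return "updated" // the branch was already in the table
	}
	if len(t) == 0 {
		// Zero branches recorded: treat the repository as not synced.
		for n, c := range gitBranches {
			t[n] = c
		}
		return "synced"
	}
	t[name] = commitID // synced repository, so this is a new branch
	return "inserted"
}

func main() {
	git := map[string]string{"main": "c1", "dev": "c2"}
	table := branchTable{}
	fmt.Println(onPush(table, git, "dev", "c2")) // synced
	git["feature"] = "c3"
	fmt.Println(onPush(table, git, "feature", "c3")) // inserted
	git["dev"] = "c4"
	fmt.Println(onPush(table, git, "dev", "c4")) // updated
}
```

In the common case (a synced repository and an existing branch) only the single update runs, which is why a push adds almost no extra load.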

Backport #27265 by @JakobDev

Part of #27065

This PR touches functions used in templates. As templates are not statically typed, errors are harder to find, but I hope I caught them all. Some testing by other people would not hurt.

Co-authored-by: JakobDev <jakobdev@gmx.de>

Related to #26239

This PR makes some fixes:

- do not show the prompt for mirror repos and repos with pull request units disabled
- use `commit_time` instead of `updated_unix`, as `commit_time` is the real time when the branch was pushed

In the original implementation, we could only get the first 30 commit status records (the default page size). If a commit has more than 30 statuses, this leads to bug #25990. I made the following two changes:

- On the page, use the `db.ListOptions{ListAll: true}` parameter instead of `db.ListOptions{}`.
- The `GetLatestCommitStatus` function now checks whether a pager is being used.

fixed #25990
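To illustrate why an empty options value silently truncates the result, here is a minimal sketch. The `ListOptions` struct, `defaultPageSize`, and `paginate` are hypothetical stand-ins written for this example; they are not the real `db.ListOptions` implementation.

```go
package main

import "fmt"

// ListOptions is a hypothetical stand-in for a paging options struct.
type ListOptions struct {
	Page     int
	PageSize int
	ListAll  bool
}

// defaultPageSize mirrors the default paging size of 30 mentioned above.
const defaultPageSize = 30

// paginate returns the requested page, or everything when ListAll is set.
func paginate[T any](items []T, opts ListOptions) []T {
	if opts.ListAll {
		return items
	}
	size := opts.PageSize
	if size <= 0 {
		size = defaultPageSize
	}
	page := opts.Page
	if page <= 0 {
		page = 1
	}
	start := (page - 1) * size
	if start >= len(items) {
		return nil
	}
	end := start + size
	if end > len(items) {
		end = len(items)
	}
	return items[start:end]
}

func main() {
	statuses := make([]int, 45) // pretend a commit has 45 statuses
	fmt.Println(len(paginate(statuses, ListOptions{})))              // 30
	fmt.Println(len(paginate(statuses, ListOptions{ListAll: true}))) // 45
}
```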

This PR

- Fix #26093. Replace `time.Time` with `timeutil.TimeStamp`

- Fix #26135. Add missing `xorm:"extends"` to `CountLFSMetaObject` for LFS meta object query

- Add a unit test for LFS meta object garbage collection

Before:

After:

There is a bug in the recent logic: `CalcCommitStatus` always returns either the first item of `statuses` or an error status, because `state` is declared with its default value when it should be initialized to `CommitStatusSuccess`.

As a result, in

``` golang
if status.State.NoBetterThan(state) {
```

the condition is always false unless `status.State` is `CommitStatusError`, which makes no sense.

So `lastStatus` is always `nil` or an error status, and we either return the first item of `statuses` or only an error status. This is why in the first picture the commit status is `Success` rather than `Failure`.

af1ffbcd63/models/git/commit_status.go (L204-L211)
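The intended "pick the worst status" behavior can be sketched as below. This is an illustrative reconstruction under assumptions, not the code from the linked file: the `severity` ranking, the `State` and `Status` types, and `calcWorst` are all hypothetical names chosen for this example.

```go
package main

import "fmt"

// State is a hypothetical commit status state.
type State string

const (
	StateSuccess State = "success"
	StatePending State = "pending"
	StateFailure State = "failure"
	StateError   State = "error"
)

// severity ranks states; a higher value is worse. The ordering is an
// assumption made for this sketch.
var severity = map[State]int{
	StateSuccess: 0,
	StatePending: 1,
	StateFailure: 2,
	StateError:   3,
}

// NoBetterThan reports whether s is at least as bad as other.
func (s State) NoBetterThan(other State) bool {
	return severity[s] >= severity[other]
}

// Status is a hypothetical commit status record.
type Status struct {
	Context string
	State   State
}

// calcWorst returns the worst status. The running state starts at the best
// value (success), so any worse status replaces it.
func calcWorst(statuses []*Status) *Status {
	var last *Status
	state := StateSuccess
	for _, st := range statuses {
		if st.State.NoBetterThan(state) {
			state = st.State
			last = st
		}
	}
	return last
}

func main() {
	worst := calcWorst([]*Status{
		{Context: "lint", State: StateSuccess},
		{Context: "test", State: StateFailure},
		{Context: "docs", State: StateSuccess},
	})
	fmt.Println(worst.Context, worst.State) // test failure
}
```

Starting `state` at the best value is the key point: with any other starting value, statuses that are better than the start but worse than `success` would never be selected.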

Co-authored-by: Giteabot <teabot@gitea.io>

Fix #25776. Close #25826.

In the discussion of #25776, @wolfogre suggested removing the `running` and `warning` commit statuses to stay consistent with GitHub.

References:

- https://docs.github.com/en/rest/commits/statuses?apiVersion=2022-11-28#about-commit-statuses

## ⚠️ BREAKING ⚠️

The commit statuses of Gitea are now consistent with GitHub: only `pending`, `success`, `error` and `failure` are supported, while `warning` and `running` are no longer supported.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

This PR displays a pull request creation hint on the repository home page when there are newly created branches with no pull request. Only the 2 most recently updated branches from the last 6 hours are displayed.

Inspired by #14003

Replace #14003

Resolves #311

Resolves #13196

Resolves #23743

co-authored by @kolaente

When a branch's commit message is too long, the column may be too short (TEXT, 16K for MySQL).

This PR fixes that by storing only the summary, because the message is only shown on the branch list and possibly in a future search.
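As a rough sketch of what "only store the summary" could mean, the helper below keeps just the first line of a commit message. `commitSummary` is a hypothetical name for this example, and treating the first line as the summary is an assumption; the actual change may derive the summary differently.

```go
package main

import (
	"fmt"
	"strings"
)

// commitSummary returns the first line of a commit message, trimmed.
// Treating the first line as the summary is an assumption of this sketch.
func commitSummary(message string) string {
	message = strings.TrimSpace(message)
	if i := strings.IndexByte(message, '\n'); i >= 0 {
		message = message[:i]
	}
	return strings.TrimSpace(message) // also drops a trailing "\r"
}

func main() {
	msg := "Fix branch sync\n\nA very long body that would not fit the column..."
	fmt.Println(commitSummary(msg)) // Fix branch sync
}
```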

Related #14180

Related #25233

Related #22639

Close #19786

Related #12763

This PR changes all branch retrieval methods from reading git data to reading the database, to reduce git read operations.

- [x] Sync git branch information into the database when git data is pushed
- [x] Create a new table `Branch`, merge some columns of `DeletedBranch` into the `Branch` table, and drop the table `DeletedBranch`.
- [x] Read the `Branch` table when visiting the `code` -> `branch` page
- [x] Read the `Branch` table when listing branch names in the `code` page dropdown
- [x] Read the `Branch` table on the git ref compare page
- [x] Provide a button on the admin page to manually sync all branches.
- [x] Sync branches if the repository is not empty but the database has no branches when visiting pages with a branch list
- [x] Use `commit_time desc` as the default `FindBranch` order to stay consistent with the previous behavior; deleted branches will always be at the end.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Since #23493 conflicts with the latest commits, this PR is my proposal for fixing #23371.

Details are in the comments.

It also refactors the `modules/options` module to always use "filepath" to access local files.

Benefits:

* No need to do `util.CleanPath(strings.ReplaceAll(p, "\\", "/"))), "/")` any more (this appeared in more than one place before)
* The function behaviors are clearly defined

Replace #23350.

Refactor `setting.Database.UseMySQL` to `setting.Database.Type.IsMySQL()` to avoid mismatches between `Type` and `UseXXX`.

This refactor fixes the bug mentioned in #23350, so it should be backported.
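The idea can be sketched as a string-based type with predicate methods, so there is a single source of truth and no separate boolean flags to keep in sync. The type names, method set, and string values below are assumptions for illustration and may differ from the real `setting` package.

```go
package main

import "fmt"

// DatabaseType is a hypothetical string-based database type.
type DatabaseType string

// IsMySQL derives the answer from the type itself instead of a separate flag.
func (t DatabaseType) IsMySQL() bool { return t == "mysql" }

// IsSQLite3 is shown only to illustrate the pattern for other backends.
func (t DatabaseType) IsSQLite3() bool { return t == "sqlite3" }

// Database is a hypothetical settings struct holding only the type.
type Database struct {
	Type DatabaseType
}

func main() {
	db := Database{Type: "mysql"}
	fmt.Println(db.Type.IsMySQL(), db.Type.IsSQLite3()) // true false
}
```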

To avoid loading the same data more than once in an HTTP request, we can use a context cache. For example, some pages load a user with the same ID from the database in different areas of the same page, but the code lives in two separate, deeply nested code paths. How should we share the user? With this PR, if both entry functions accept `context.Context` as the first parameter, we just need to refactor `GetUserByID` to reuse the user from the context cache. The user will then not be loaded twice in one HTTP request.

Of course, sometimes we want to reload an object from the database, which is why `RemoveContextData` is also exposed.

The core of the context cache is shown here. It defines a new context:

```go
type cacheContext struct {
	ctx  context.Context
	data map[any]map[any]any
	lock sync.RWMutex
}

var cacheContextKey = struct{}{}

func WithCacheContext(ctx context.Context) context.Context {
	return context.WithValue(ctx, cacheContextKey, &cacheContext{
		ctx:  ctx,
		data: make(map[any]map[any]any),
	})
}
```

Then you can use the 4 functions below to read, write, and delete data within the same context.

```go
func GetContextData(ctx context.Context, tp, key any) any
func SetContextData(ctx context.Context, tp, key, value any)
func RemoveContextData(ctx context.Context, tp, key any)
func GetWithContextCache[T any](ctx context.Context, cacheGroupKey string, cacheTargetID any, f func() (T, error)) (T, error)
```

Then let's take a look at how `system.GetSetting` uses it.

```go
func GetSetting(ctx context.Context, key string) (string, error) {
	return cache.GetWithContextCache(ctx, contextCacheKey, key, func() (string, error) {
		return cache.GetString(genSettingCacheKey(key), func() (string, error) {
			res, err := GetSettingNoCache(ctx, key)
			if err != nil {
				return "", err
			}
			return res.SettingValue, nil
		})
	})
}
```

First, it checks whether the context data includes the setting object for the key. If not, it queries the global cache, which may be an in-memory or a Redis cache. If the object is not there either, it is loaded from the database. Finally, if the object came from the global cache or the database, it is stored in the context cache.

An object stored in the context cache is only destroyed when the context goes away.
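To make the lookup order concrete, here is a small self-contained sketch of a per-request cache carried in a `context.Context`. It is a simplified illustration and not the PR's implementation: `requestCache`, `withRequestCache`, `getOrLoad`, and `countLoads` are hypothetical names, and the global cache layer is left out.

```go
package main

import (
	"context"
	"fmt"
	"sync"
)

// requestCache is a hypothetical per-request cache: group -> id -> value.
type requestCache struct {
	lock sync.RWMutex
	data map[string]map[any]any
}

// requestCacheKey is a private key type to avoid collisions in the context.
type requestCacheKey struct{}

// withRequestCache attaches an empty cache to the context.
func withRequestCache(ctx context.Context) context.Context {
	return context.WithValue(ctx, requestCacheKey{}, &requestCache{
		data: make(map[string]map[any]any),
	})
}

// getOrLoad returns the cached value for (group, id) or calls load once and
// stores the result. Without a cache in the context it always calls load.
func getOrLoad[T any](ctx context.Context, group string, id any, load func() (T, error)) (T, error) {
	c, ok := ctx.Value(requestCacheKey{}).(*requestCache)
	if !ok {
		return load()
	}
	c.lock.RLock()
	v, found := c.data[group][id]
	c.lock.RUnlock()
	if found {
		if t, ok := v.(T); ok {
			return t, nil
		}
	}
	t, err := load()
	if err != nil {
		return t, err
	}
	c.lock.Lock()
	if c.data[group] == nil {
		c.data[group] = make(map[any]any)
	}
	c.data[group][id] = t
	c.lock.Unlock()
	return t, nil
}

// countLoads requests the same user several times and reports how often the
// loader actually ran.
func countLoads(cached bool, calls int) int {
	ctx := context.Background()
	if cached {
		ctx = withRequestCache(ctx)
	}
	loads := 0
	for i := 0; i < calls; i++ {
		_, _ = getOrLoad(ctx, "user", int64(1), func() (string, error) {
			loads++
			return "alice", nil
		})
	}
	return loads
}

func main() {
	fmt.Println(countLoads(true, 3))  // 1
	fmt.Println(countLoads(false, 3)) // 3
}
```

Because the cache lives in the context value, it is dropped together with the request context, which matches the lifetime described above.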

This PR adds a task to the cron service to allow garbage collection of LFS meta objects. As repositories may have a large number of LFSMetaObjects, an updated column is added to this table and it is used to perform a generational GC to attempt to reduce the amount of work. (There may need to be a bit more work here but this is probably enough for the moment.)

Fix #7045

Signed-off-by: Andrew Thornton <art27@cantab.net>

This PR introduces glob matching for protected branch names. The separator is `/`; `*` matches any sequence of non-separator characters and `**` also matches across separators.

It also supports entering an existing or non-existing branch name as the matching condition, and a branch name condition has higher priority than a glob rule.
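The difference between `*` and `**` can be illustrated with a small standard-library sketch that translates a pattern into a regular expression. This is only an approximation of the semantics described above; `globToRegexp` and `matchBranch` are hypothetical helpers, and the PR itself may rely on a dedicated glob library.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// globToRegexp converts a branch glob into an anchored regular expression:
// "**" matches anything including "/", "*" matches anything except "/".
func globToRegexp(pattern string) (*regexp.Regexp, error) {
	runes := []rune(pattern)
	var b strings.Builder
	b.WriteString("^")
	for i := 0; i < len(runes); i++ {
		switch {
		case runes[i] == '*' && i+1 < len(runes) && runes[i+1] == '*':
			b.WriteString(".*")
			i++ // skip the second '*'
		case runes[i] == '*':
			b.WriteString("[^/]*")
		default:
			b.WriteString(regexp.QuoteMeta(string(runes[i])))
		}
	}
	b.WriteString("$")
	return regexp.Compile(b.String())
}

// matchBranch reports whether the branch name matches the glob pattern.
func matchBranch(pattern, branch string) bool {
	re, err := globToRegexp(pattern)
	if err != nil {
		return false
	}
	return re.MatchString(branch)
}

func main() {
	fmt.Println(matchBranch("release/*", "release/v1"))         // true
	fmt.Println(matchBranch("release/*", "release/v1/hotfix"))  // false
	fmt.Println(matchBranch("release/**", "release/v1/hotfix")) // true
}
```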

Should fix #2529 and #15705

screenshots

<img width="1160" alt="image" src="https://user-images.githubusercontent.com/81045/205651179-ebb5492a-4ade-4bb4-a13c-965e8c927063.png">

Co-authored-by: zeripath <art27@cantab.net>

- Merge the files `compare.go` and `slice.go` into `slice.go`.
- Fix `ExistsInSlice`, which is buggy:
  - It uses `sort.Search`, so it assumes that the input slice is sorted.
  - It passes `func(i int) bool { return slice[i] == target }` to `sort.Search`, which is incorrect; check the doc of `sort.Search`.
- Combine `IsInt64InSlice(int64, []int64)` and `ExistsInSlice(string, []string)` into `SliceContains[T]([]T, T)`.
- Combine `IsSliceInt64Eq([]int64, []int64)` and `IsEqualSlice([]string, []string)` into `SliceSortedEqual[T]([]T, []T)`.
- Add `SliceEqual[T]([]T, []T)` as a distinction from `SliceSortedEqual[T]([]T, []T)`.
- Redesign `RemoveIDFromList([]int64, int64) ([]int64, bool)` as `SliceRemoveAll[T]([]T, T) []T`.
- Add `SliceContainsFunc[T]([]T, func(T) bool)` and `SliceRemoveAllFunc[T]([]T, func(T) bool)` for general use.
- Add comments to explain why `golang.org/x/exp/slices` is not used.
- Add unit tests.
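The `sort.Search` problem is easy to reproduce. `sort.Search` performs a binary search and expects a predicate that is false for a prefix of the indices and true for the rest; an equality predicate does not have that shape. The sketch below contrasts a reconstruction of the buggy pattern with a plain generic linear scan. Both function bodies are written for this illustration and are not copied from the PR.

```go
package main

import (
	"fmt"
	"sort"
)

// buggyExists reconstructs the faulty pattern: an equality predicate is not
// monotonic, so the binary search can skip over the target.
func buggyExists(target string, slice []string) bool {
	i := sort.Search(len(slice), func(i int) bool { return slice[i] == target })
	return i < len(slice)
}

// SliceContains is a simple generic linear scan that works on any slice,
// sorted or not.
func SliceContains[T comparable](slice []T, target T) bool {
	for _, v := range slice {
		if v == target {
			return true
		}
	}
	return false
}

func main() {
	names := []string{"a", "b", "c"}
	fmt.Println(buggyExists("a", names))   // false, although "a" is present
	fmt.Println(SliceContains(names, "a")) // true
}
```

With three elements the binary search first probes index 1, sees that `"b" != "a"`, and moves right, so it never looks at index 0.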

After #22362, we can freely use transactions without `db.DefaultContext`.

Many models still use `db.DefaultContext`; I think we should refactor them carefully, one by one.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The recent PR adding orphaned checks to the LFS storage is not sufficient to completely GC LFS, as it is possible for LFSMetaObjects to remain associated with repos but still need to be garbage collected.

Imagine a situation where a branch is uploaded containing LFS files but that branch is later completely deleted. The LFSMetaObjects will remain associated with the Repository but the Repository will no longer contain any pointers to the object.

This PR adds a second doctor command to perform a full GC.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Change all license headers to comply with REUSE specification.

Fix #16132

Co-authored-by: flynnnnnnnnnn <flynnnnnnnnnn@github>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

This PR adds a context parameter to a number of methods. Some `xxxCtx()` helper methods have been replaced by methods with the normal name.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>