Fix #28056

This PR checks whether the repository has zero branches when a branch is pushed. If so, the repository has not been synced yet.

This happens when a user upgrades from v1.20 to v1.21 and then pushes branches without visiting the repository in the web interface. All repository routers check whether a branch sync is necessary, but push has no such check.

Every repository is in one of two states: synced or not synced. A repository with zero branches in the database is assumed to be unsynced; otherwise it is considered synced. For a synced repository, we only need to update the pushed branch or insert a new one; for an unsynced repository, we do a full sync. As a result, a push adds almost no extra load, which performs better than the alternative approach.

In the implementation, we first try to update the branch. If the update affects more than zero records, the branch was already in the database and we are done. If no record is affected, the branch does not exist in the database, and there are two possibilities: either this is a new branch, in which case we only need to insert the record, or the branches have not been synced yet, in which case we need to sync all branches into the database.
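The decision flow described above can be sketched roughly as follows. This is only an illustration with an in-memory map standing in for the branch table; `branchStore`, `update` and `pushBranch` are hypothetical names and not the functions used in this PR.

```go
package main

import "fmt"

// branchStore is a hypothetical in-memory stand-in for the branch table.
type branchStore struct {
	branches map[string]string // branch name -> commit ID
}

// update mimics an UPDATE statement and returns the number of affected rows.
func (s *branchStore) update(name, commitID string) int {
	if _, ok := s.branches[name]; !ok {
		return 0
	}
	s.branches[name] = commitID
	return 1
}

// pushBranch records a pushed branch. gitBranches stands for the branches
// that exist in the git repository itself (including the pushed one).
// It returns which path was taken: "update", "insert" or "full-sync".
func pushBranch(s *branchStore, gitBranches map[string]string, name, commitID string) string {
	// 1. Try to update first; affected rows > 0 means the branch is already known.
	if s.update(name, commitID) > 0 {
		return "update"
	}
	// 2. Nothing affected and zero branches stored: the repository was never synced.
	if len(s.branches) == 0 {
		for n, c := range gitBranches {
			s.branches[n] = c
		}
		return "full-sync"
	}
	// 3. Otherwise this is simply a new branch.
	s.branches[name] = commitID
	return "insert"
}

func main() {
	git := map[string]string{"main": "a1", "dev": "b2"}
	store := &branchStore{branches: map[string]string{}}
	fmt.Println(pushBranch(store, git, "dev", "b2"))     // full-sync
	fmt.Println(pushBranch(store, git, "dev", "b3"))     // update
	fmt.Println(pushBranch(store, git, "feature", "c4")) // insert
}
```

Only the first push to an unsynced repository pays for the full sync; every later push takes the cheap update or insert path.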

The function `GetByBean` has an obvious defect: fields with empty (zero) values are ignored when building the query. Callers can then get a wrong result, which could potentially be exploited as a security problem.
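To illustrate the defect, here is a minimal sketch that mimics a bean-based lookup over an in-memory slice. It is not xorm's or Gitea's code; `getByBean`, `getByID` and the `user` type are hypothetical and only show why skipping zero-valued fields is dangerous.

```go
package main

import "fmt"

type user struct {
	ID   int64
	Name string
}

// getByBean mimics a bean-based lookup: zero-valued fields of the bean
// are silently dropped instead of being used as conditions.
func getByBean(rows []user, bean user) (user, bool) {
	for _, r := range rows {
		if bean.ID != 0 && r.ID != bean.ID {
			continue
		}
		if bean.Name != "" && r.Name != bean.Name {
			continue
		}
		return r, true
	}
	return user{}, false
}

// getByID always applies the ID condition, even when id is zero.
func getByID(rows []user, id int64) (user, bool) {
	for _, r := range rows {
		if r.ID == id {
			return r, true
		}
	}
	return user{}, false
}

func main() {
	rows := []user{{ID: 1, Name: "alice"}, {ID: 2, Name: "bob"}}

	// The caller meant "user with ID 0", but the condition vanishes
	// and the first row is returned.
	u, ok := getByBean(rows, user{ID: 0})
	fmt.Println(u.Name, ok) // alice true

	// An explicit condition correctly finds nothing.
	_, ok = getByID(rows, 0)
	fmt.Println(ok) // false
}
```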

To avoid that possibility, this PR removes the function `GetByBean` and all references to it.

Some new generic functions have been introduced to replace it. The recommended usage is shown below.

```go
// query an object by its ID
obj, err := db.GetByID[Object](ctx, id)

// query with other conditions
obj, err := db.Get[Object](ctx, builder.Eq{"a": a, "b": b})
```

This fixes #28268.

<img width="1313" alt="image"

src="https://github.com/go-gitea/gitea/assets/9418365/cb1e07d5-7a12-4691-a054-8278ba255bfc">

<img width="1318" alt="image"

src="https://github.com/go-gitea/gitea/assets/9418365/4fd60820-97f1-4c2c-a233-d3671a5039e9">

## ⚠️ BREAKING ⚠️

However, this requires giving up some features:

<img width="1312" alt="image"

src="https://github.com/go-gitea/gitea/assets/9418365/281c0d51-0e7d-473f-bbed-216e2f645610">

However, giving them up may also fix #28055.

## Background

When the user switches the dashboard context to an org, it means they

want to search issues in the repos that belong to the org. However, when

they switch to themselves, it means all repos they can access because

they may have created an issue in a public repo that they don't own.

<img width="286" alt="image"

src="https://github.com/go-gitea/gitea/assets/9418365/182dcd5b-1c20-4725-93af-96e8dfae5b97">

It's a confusing design. Think about this: What does "In your

repositories" mean when the user switches to an org? Repos belong to the

user or the org?

In any case, it has been broken by #26012 and its follow-up PRs. Since that PR, the search only covers issues in repos that the dashboard context user owns or has been explicitly granted access to, which causes #28268.

## How to fix it

It's not really difficult to fix it. Just extend the repo scope to

search issues when the dashboard context user is the doer. Since the

user may create issues or be mentioned in any public repo, we can just

set `AllPublic` to true, which is already supported by indexers. The DB

condition will also support it in this PR.

But the real difficulty is how to count the search results grouped by

repos. It's something like "search issues with this keyword and those

filters, and return the total number and the top results. **Then, group

all of them by repo and return the counts of each group.**"

<img width="314" alt="image"

src="https://github.com/go-gitea/gitea/assets/9418365/5206eb20-f8f5-49b9-b45a-1be2fcf679f4">

Before #26012, it was being done in the DB, but it caused the results to

be incomplete (see the description of #26012).

To keep this feature, #26012 implemented it in an inefficient way: it counts the issues repo by repo. This cannot work when `AllPublic` is true, because it is practically impossible to do this for all public repos.

1bfcdeef4c/modules/indexer/issues/indexer.go (L318-L338)

## Give up unnecessary features

We may be able to resolve `TODO: use "group by" of the indexer engines to implement it`. I'm sure it can be done with Elasticsearch, but IIRC, Bleve and Meilisearch don't support "group by".

And the real question is: is it worth it? Why do we need to know the counts grouped by repos?

Let me show you my search dashboard on gitea.com.

<img width="1304" alt="image"

src="https://github.com/go-gitea/gitea/assets/9418365/2bca2d46-6c71-4de1-94cb-0c9af27c62ff">

I don't think the long repo list helps with anything.

If we agree to abandon it, things become much easier. That is what this PR does.

## TODO

I know it's important to filter by repos when searching issues. However,

it shouldn't be the way we have it now. It could be implemented like

this.

<img width="1316" alt="image"

src="https://github.com/go-gitea/gitea/assets/9418365/99ee5f21-cbb5-4dfe-914d-cb796cb79fbe">

The indexers support it well now, but it requires some frontend work,

which I'm not good at. So, I think someone could help do that in another

PR and merge this one to fix the bug first.

Or please block this PR and help to complete it.

Finally, "Switch dashboard context" is also a design that needs

improvement. In my opinion, it can be accomplished by adding filtering

conditions instead of "switching".

When we pick up a job, all waiting jobs should first be ordered by update time. Otherwise, if an older job is rerun while another job is running, the older job will run first because its ID is smaller.
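A small sketch of the intended ordering, assuming a simplified `job` type with only an ID and an update timestamp (both hypothetical; the real query is done in the database):

```go
package main

import (
	"fmt"
	"sort"
)

// job is a simplified stand-in for a waiting job.
type job struct {
	ID      int64
	Updated int64 // Unix timestamp of the last update
}

// sortWaiting orders jobs by update time first and by ID as a tie-breaker.
func sortWaiting(jobs []job) {
	sort.SliceStable(jobs, func(i, j int) bool {
		if jobs[i].Updated != jobs[j].Updated {
			return jobs[i].Updated < jobs[j].Updated
		}
		return jobs[i].ID < jobs[j].ID
	})
}

func main() {
	// Job 1 has the smallest ID but was rerun most recently.
	waiting := []job{{ID: 1, Updated: 300}, {ID: 2, Updated: 100}, {ID: 3, Updated: 200}}
	sortWaiting(waiting)
	for _, j := range waiting {
		fmt.Println(j.ID) // 2, then 3, then 1
	}
}
```

With ordering by ID alone, the rerun job 1 would jump ahead of jobs 2 and 3, which have been waiting longer.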

This resolves a problem I encountered while updating gitea from 1.20.4

to 1.21. For some reason (correct or otherwise) there are some values in

`repository.size` that are NULL in my gitea database which cause this

migration to fail due to the NOT NULL constraints.

Log snippet (excuse the escape characters)

```

ESC[36mgitea |ESC[0m 2023-12-04T03:52:28.573122395Z 2023/12/04 03:52:28 ...ations/migrations.go:641:Migrate() [I] Migration[263]: Add git_size and lfs_size columns to repository table

ESC[36mgitea |ESC[0m 2023-12-04T03:52:28.608705544Z 2023/12/04 03:52:28 routers/common/db.go:36:InitDBEngine() [E] ORM engine initialization attempt #3/10 failed. Error: migrate: migration[263]: Add git_size and lfs_size columns to repository table failed: NOT NULL constraint failed: repository.git_size

```

I assume this should be reasonably safe since `repository.git_size` has

a default value of 0 but I don't know if that value being 0 in the odd

situation where `repository.size == NULL` has any problematic

consequences.

- Currently the repository description uses the same sanitizer as a normal markdown document. This means that elements such as headings and images are allowed and can be abused.

- Create a minimal restricted sanitizer for the repository description,

which only allows what the postprocessor currently allows, which are

links and emojis.

- Added unit testing.

- Resolves https://codeberg.org/forgejo/forgejo/issues/1202

- Resolves https://codeberg.org/Codeberg/Community/issues/1122

(cherry picked from commit 631c87cc23)

Co-authored-by: Gusted <postmaster@gusted.xyz>

Changed the package quota calculation to use the package `creator ID` instead of the `owner ID`.

Currently, users are allowed to create an unlimited number of organizations, each of which has its own package quota, so a user can obtain unlimited package space across different organization scopes. This fix calculates the package quota based on the `package version creator ID` instead of the `package version owner ID` (which might be an organization), so that users cannot take more space than the configured package settings allow.
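The difference between the two accounting schemes can be sketched with a few in-memory records. The `packageVersion` type, the helper names and the limit of 100 are assumptions for illustration only, not the code or configuration used by this fix.

```go
package main

import "fmt"

// packageVersion is a hypothetical, simplified record of an uploaded version.
type packageVersion struct {
	OwnerID   int64 // user or organization that owns the package
	CreatorID int64 // user who uploaded this version
	Size      int64
}

// usedBy sums the sizes of all versions whose key matches id.
func usedBy(versions []packageVersion, id int64, key func(packageVersion) int64) int64 {
	var total int64
	for _, v := range versions {
		if key(v) == id {
			total += v.Size
		}
	}
	return total
}

func byOwner(v packageVersion) int64   { return v.OwnerID }
func byCreator(v packageVersion) int64 { return v.CreatorID }

func main() {
	const limit = 100
	// User 1 uploads 60 units into each of two organizations (IDs 10 and 11).
	versions := []packageVersion{
		{OwnerID: 10, CreatorID: 1, Size: 60},
		{OwnerID: 11, CreatorID: 1, Size: 60},
	}
	fmt.Println(usedBy(versions, 10, byOwner) > limit)  // false: each org stays under the limit
	fmt.Println(usedBy(versions, 1, byCreator) > limit) // true: the creator is over the limit
}
```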

There is also an edge case in which users can publish packages to a specific package version that was initially published by a different user, which counts against that user's package size quota. The behavior in this fix should still be better, because the total amount of space is limited to the quota for users sharing the same organization scope.

System users (Ghost, ActionsUser, etc) have a negative id and may be the

author of a comment, either because it was created by a now deleted user

or via an action using a transient token.

The GetPossibleUserByID function has special cases related to system

users and will not fail if given a negative id.

Refs: https://codeberg.org/forgejo/forgejo/issues/1425

(cherry picked from commit 6a2d2fa243)

Fixes https://codeberg.org/forgejo/forgejo/issues/1458

Some mails such as issue creation mails are missing the reply-to-comment

address. This PR fixes that and specifies which comment types should get

a reply-possibility.

## Bug in Gitea

I ran into this bug when I accidentally used the wrong redirect URL for

the oauth2 provider when using mssql. But the oauth2 provider still got

added.

Most of the time, we use `Delete(&some{id: some.id})` or `In(condition).Delete(&some{})`, which specify the conditions explicitly. But this function uses `Delete(source)`, where `source.Cfg` is a non-empty `TEXT` field. This causes the xorm `Delete` function to fail on mssql.

61ff91f960/models/auth/source.go (L234-L240)

## Reason

A `TEXT` field cannot be compared in mssql, so xorm doesn't support it, according to [this PR](https://gitea.com/xorm/xorm/pulls/2062).

[Related code](b23798dc98/internal/statements/statement.go (L552-L558)) in xorm:

```go
		if statement.dialect.URI().DBType == schemas.MSSQL && (col.SQLType.Name == schemas.Text ||
			col.SQLType.IsBlob() || col.SQLType.Name == schemas.TimeStampz) {
			if utils.IsValueZero(fieldValue) {
				continue
			}
			return nil, fmt.Errorf("column %s is a TEXT type with data %#v which cannot be as compare condition", col.Name, fieldValue.Interface())
		}
	}
```

When the `Delete` function in xorm is used, non-empty fields are automatically used as conditions (perhaps with the exception of some special fields). If a `TEXT` field is not empty, xorm returns an error. This is the only such usage I found after searching, but maybe there is something I am missing.

---------

Co-authored-by: delvh <dev.lh@web.de>

- On user deletion, delete action runners that the user has created.

- Add a database consistency check to remove action runners whose owner no longer exists.

- Resolves https://codeberg.org/forgejo/forgejo/issues/1720

(cherry picked from commit 009ca7223d)

Co-authored-by: Gusted <postmaster@gusted.xyz>

Steps to reproduce:

1. Create a new OAuth2 source.
2. Log in as a user with this OAuth2 source.
3. Disable the OAuth2 source.
4. Visit users -> settings -> security; a 500 error is displayed.

This is because the page only loads active OAuth2 sources instead of all OAuth2 sources.

See https://github.com/go-gitea/gitea/pull/27718#issuecomment-1773743014. Add a test to ensure its behavior.

Why does this test use `ProjectBoardID=0`? Because in `SearchOptions`, `ProjectBoardID=0` means exactly that: the board ID is 0. But in `IssueOptions`, `ProjectBoardID=0` means there is no condition, and `ProjectBoardID=db.NoConditionID` means the board ID is 0.

It's really confusing. Probably it's better to separate the db search

engine and the other issue search code. It's really two different

systems. As far as I can see, `IssueOptions` is not necessary for most

of the code, which has very simple issue search conditions.

1. remove unused function `MoveIssueAcrossProjectBoards`

2. extract the project board condition into a function

3. use db.NoCondition instead of -1. (BTW, the usage of db.NoCondition

is too confusing. Is there any way to avoid that?)

4. remove the unnecessary comment since the ctx refactor is completed.

5. Change `b.ID != 0` to `b.ID > 0`. It's more intuitive but I think

they're the same since board ID can't be negative.

Closes #27455

> The mechanism responsible for long-term authentication (the 'remember

me' cookie) uses a weak construction technique. It will hash the user's

hashed password and the rands value; it will then call the secure cookie

code, which will encrypt the user's name with the computed hash. If one

were able to dump the database, they could extract those two values to

rebuild that cookie and impersonate a user. That vulnerability exists

from the date the dump was obtained until a user changed their password.

>

> To fix this security issue, the cookie could be created and verified

using a different technique such as the one explained at

https://paragonie.com/blog/2015/04/secure-authentication-php-with-long-term-persistence#secure-remember-me-cookies.

The PR removes the now obsolete setting `COOKIE_USERNAME`.

`assert.Fail()` lets the test continue executing, while `assert.FailNow()` does not. I think these uses of `assert.Fail()` should exit immediately.

PS: perhaps it's a good idea to use [require](https://pkg.go.dev/github.com/stretchr/testify/require) in some places, because the assert package by default does not exit when an error occurs, which makes it difficult to find the root cause.

Part of https://github.com/go-gitea/gitea/issues/27097:

- `gitea` theme is renamed to `gitea-light`

- `arc-green` theme is renamed to `gitea-dark`

- `auto` theme is renamed to `gitea-auto`

I put both themes in separate CSS files, removing all colors from the

base CSS. Existing users will be migrated to the new theme names. The

dark theme recolor will follow in a separate PR.

## ⚠️ BREAKING ⚠️

1. If there are existing custom themes with the names `gitea-light` or

`gitea-dark`, rename them before this upgrade and update the `theme`

column in the `user` table for each affected user.

2. The theme in `<html>` has moved from `class="theme-name"` to `data-theme="name"`; existing customizations that depend on the old class should be updated.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Giteabot <teabot@gitea.io>

This PR reduces the complexity of the system settings framework.

Introducing a new option takes only one line, and the option can then be used anywhere out of the box.

It is still performant (even more so than before) because the config values are cached in the config system.

Part of #27065

This PR touches functions used in templates. As templates are not statically typed, errors are harder to find, but I hope I caught them all. Some testing by other people would not hurt.

This PR removes the second parameter of `unittest.MainTest`, `TestOptions.GiteaRoot`. The root directory is now detected from the current working directory.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Partially Fix #25041

This PR redefines the meaning of the column `is_active` in the table `action_runner_token`.

Before this PR, `is_active` meant that the token had been used by a runner. If it was true, no other runner could use the token to register again.

With this PR, `is_active` means that the token is valid for registering runners. While it is true, the token can be used to register runners until it becomes false. When a new active registration token is created, `is_active` is set to false on all previous tokens.
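The new semantics can be sketched like this. `runnerToken`, `tokenStore`, `newToken` and `canRegister` are hypothetical names for an in-memory model; the real logic lives in the database layer.

```go
package main

import "fmt"

// runnerToken is a simplified stand-in for a row in action_runner_token.
type runnerToken struct {
	Token    string
	IsActive bool
}

type tokenStore struct {
	tokens []*runnerToken
}

// newToken creates a new active registration token and
// deactivates all previously created tokens.
func (s *tokenStore) newToken(value string) *runnerToken {
	for _, t := range s.tokens {
		t.IsActive = false
	}
	t := &runnerToken{Token: value, IsActive: true}
	s.tokens = append(s.tokens, t)
	return t
}

// canRegister reports whether the token may be used to register a runner.
// An active token can be reused any number of times.
func (s *tokenStore) canRegister(value string) bool {
	for _, t := range s.tokens {
		if t.Token == value {
			return t.IsActive
		}
	}
	return false
}

func main() {
	s := &tokenStore{}
	s.newToken("first")
	fmt.Println(s.canRegister("first"), s.canRegister("first")) // true true
	s.newToken("second")
	fmt.Println(s.canRegister("first"), s.canRegister("second")) // false true
}
```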

This fixes a performance bottleneck discovered by Codeberg. Every query with a WHERE clause on that table (which has grown big over time) uses this column, but there is no index on it.

See this part of the log which was posted on Matrix:

```

2023/09/10 00:52:01 ...rs/web/repo/issue.go:1446:ViewIssue() [W] [Slow SQL Query] UPDATE `issue_user` SET is_read=? WHERE uid=? AND issue_id=? [true x y] - 51.395434887s

2023/09/10 00:52:01 ...rs/web/repo/issue.go:1447:ViewIssue() [E] ReadBy: Error 1205 (HY000): Lock wait timeout exceeded; try restarting transaction

2023/09/10 00:52:01 ...eb/routing/logger.go:102:func1() [I] router: completed GET /Codeberg/Community/issues/1201 for [::ffff:xxx]:0, 500 Internal Server Error in 52384.2ms @ repo/issue.go:1256(repo.ViewIssue)

```

Fix the bug on try.gitea.io

```log

2023/09/18 01:48:41 ...ations/migrations.go:635:Migrate() [I] Migration[276]: Add RemoteAddress to mirrors

2023/09/18 01:48:41 routers/common/db.go:34:InitDBEngine() [E] ORM engine initialization attempt #7/10 failed. Error: migrate: migration[276]: Add RemoteAddress to mirrors failed: exit status 128 - fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

- fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

```

Caused by #26952

---------

Co-authored-by: Jason Song <i@wolfogre.com>

This PR adds a new field `RemoteAddress` to both mirror types which

contains the sanitized remote address for easier (database) access to

that information. Will be used in the audit PR if merged.

Part of #27065

This reduces the usage of `db.DefaultContext`. I think I've got enough

files for the first PR. When this is merged, I will continue working on

this.

Considering how many files this PR affects, I hope it won't take too long to merge, so I don't end up in merge conflict hell.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Since the issue indexer has been refactored, the issue overview webpage

is built by the `buildIssueOverview` function and underlying

`indexer.Search` function and `GetIssueStats` instead of

`GetUserIssueStats`. So the function is no longer used.

I moved the relevant tests to `indexer_test.go` and since the search

option changed from `IssueOptions` to `SearchOptions`, most of the tests

are useless now.

We need more tests about the db indexer because those tests are highly

connected with the issue overview webpage and now this page has several

bugs.

Any advice about those test cases is appreciated.

---------

Co-authored-by: CaiCandong <50507092+CaiCandong@users.noreply.github.com>

Fix #26723

Add `ChangeDefaultBranch` to the `notifier` interface and implement it

in `indexerNotifier`. So when changing the default branch,

`indexerNotifier` sends a message to the `indexer queue` to update the

index.

---------

Co-authored-by: techknowlogick <matti@mdranta.net>

Unfortunately, when a system setting hasn't been stored in the database, it cannot be cached.

This PR also uses the context cache for the avatars shown for push emails, which avoids reading the user table by email address again and again. According to my local test, this reduces the dashboard's elapsed time from 150ms to 80ms.

Currently, Artifact does not have an expiration and automatic cleanup

mechanism, and this feature needs to be added. It contains the following

key points:

- [x] add global artifact retention days option in config file. Default

value is 90 days.

- [x] add cron task to clean up expired artifacts. It should run once a

day.

- [x] support custom retention period from `retention-days: 5` in

`upload-artifact@v3`.

- [x] artifacts link in actions view should be non-clickable text when

expired.

> ### Description

> If a new branch is pushed, and the repository has a rule that would

require signed commits for the new branch, the commit is rejected with a

500 error regardless of whether it's signed.

>

> When pushing a new branch, the "old" commit is the empty ID

(0000000000000000000000000000000000000000). verifyCommits has no

provision for this and passes an invalid commit range to git rev-list.

Prior to 1.19 this wasn't an issue because only pre-existing individual

branches could be protected.

>

> I was able to reproduce with

[try.gitea.io/CraigTest/test](https://try.gitea.io/CraigTest/test),

which is set up with a blanket rule to require commits on all branches.

Fix #25565

Many thanks to @Craig-Holmquist-NTI for reporting the bug and suggesting a valid solution!
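As a rough sketch of how the empty old commit ID from the report can be special-cased when building `git rev-list` arguments: `revListArgs` is a hypothetical helper, and both the `old..new` range form and the `--not --all` fallback for new branches are assumptions for illustration, not necessarily what this PR does.

```go
package main

import (
	"fmt"
	"strings"
)

// emptyID is the all-zero ID git reports as the "old" commit of a new branch.
const emptyID = "0000000000000000000000000000000000000000"

// revListArgs builds the arguments for `git rev-list` that list the commits
// introduced by a push from oldID to newID.
func revListArgs(oldID, newID string) []string {
	if oldID == emptyID {
		// New branch: there is no valid range, so list the commits reachable
		// from newID that are not reachable from any existing ref.
		return []string{"rev-list", newID, "--not", "--all"}
	}
	return []string{"rev-list", oldID + ".." + newID}
}

func main() {
	fmt.Println(strings.Join(revListArgs(emptyID, "abc123"), " "))
	fmt.Println(strings.Join(revListArgs("def456", "abc123"), " "))
}
```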

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

spec:

https://docs.github.com/en/rest/actions/secrets?apiVersion=2022-11-28#create-or-update-a-repository-secret

- Add a new route for creating or updating a secret value in a

repository

- Create a new file `routers/api/v1/repo/action.go` with the

implementation of the `CreateOrUpdateSecret` function

- Update the Swagger documentation for the `updateRepoSecret` operation

in the `v1_json.tmpl` template file

---------

Signed-off-by: Bo-Yi Wu <appleboy.tw@gmail.com>

Co-authored-by: Giteabot <teabot@gitea.io>

In PR #26786, the Go version for golangci-lint is bumped to 1.21. This

causes the following error:

```

models/migrations/v1_16/v210.go:132:23: SA1019: elliptic.Marshal has been deprecated since Go 1.21: for ECDH, use the crypto/ecdh package. This function returns an encoding equivalent to that of PublicKey.Bytes in crypto/ecdh. (staticcheck)

PublicKey: elliptic.Marshal(elliptic.P256(), parsed.PubKey.X, parsed.PubKey.Y),

```

The change now uses [func (*PublicKey) ECDH](https://pkg.go.dev/crypto/ecdsa#PublicKey.ECDH), which was added in Go 1.20.

- Resolves https://codeberg.org/forgejo/forgejo/issues/580

- Return a `upload_field` to any release API response, which points to

the API URL for uploading new assets.

- Adds unit test.

- Adds integration testing to verify URL is returned correctly and that

upload endpoint actually works

---------

Co-authored-by: Gusted <postmaster@gusted.xyz>

Replace #22751

1. only support the default branch in the repository setting.

2. autoload schedule data from the schedule table after starting the

service.

3. support specific syntax like `@yearly`, `@monthly`, `@weekly`,

`@daily`, `@hourly`

## How to use

See the [GitHub Actions

document](https://docs.github.com/en/actions/using-workflows/events-that-trigger-workflows#schedule)

for getting more detailed information.

```yaml
on:
  schedule:
    - cron: '30 5 * * 1,3'
    - cron: '30 5 * * 2,4'

jobs:
  test_schedule:
    runs-on: ubuntu-latest
    steps:
      - name: Not on Monday or Wednesday
        if: github.event.schedule != '30 5 * * 1,3'
        run: echo "This step will be skipped on Monday and Wednesday"
      - name: Every time
        run: echo "This step will always run"
```

Signed-off-by: Bo-Yi.Wu <appleboy.tw@gmail.com>

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

This PR has multiple parts, and I didn't split them because

it's not easy to test them separately since they are all about the

dashboard page for issues.

1. Support counting issues via indexer to fix #26361

2. Fix repo selection so it also fixes #26653

3. Keep keywords in filter links.

The first two are regressions of #26012.

After:

https://github.com/go-gitea/gitea/assets/9418365/71dfea7e-d9e2-42b6-851a-cc081435c946

Thanks to @CaiCandong for helping with some tests.

- Add a new function `CountOrgSecrets` in the file

`models/secret/secret.go`

- Add a new file `modules/structs/secret.go`

- Add a new function `ListActionsSecrets` in the file

`routers/api/v1/api.go`

- Add a new file `routers/api/v1/org/action.go`

- Add a new function `listActionsSecrets` in the file

`routers/api/v1/org/action.go`

go-sdk: https://gitea.com/gitea/go-sdk/pulls/629

---------

Signed-off-by: Bo-Yi Wu <appleboy.tw@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: techknowlogick <matti@mdranta.net>

Co-authored-by: Giteabot <teabot@gitea.io>

## Archived labels

This adds the structure to allow for archived labels.

Archived labels are, just like closed milestones or projects, a medium to hide information without deleting it.

It is especially useful if there are outdated labels that should no longer be used without deleting the label entirely.

## Changes

1. UI and API have been equipped with the support to mark a label as archived

2. The time when a label has been archived will be stored in the DB

## Outsourced for the future

There's no special handling for archived labels at the moment.

This will be done in the future.


Part of https://github.com/go-gitea/gitea/issues/25237

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fixes #25564

Fixes #23191

- Api v2 search endpoint should return only the latest version matching

the query

- Api v3 search endpoint should return `take` packages not package

versions

The xorm `Sync2` has already been deprecated in favor of `Sync`,

so let's do the same inside the Gitea codebase.

Command used to replace everything:

```sh

for i in $(ag Sync2 --files-with-matches); do vim $i -c ':%sno/Sync2/Sync/g' -c ':wq'; done

```

This PR refactors a bunch of projects-related code, mostly the

templates.

The following things were done:

- rename boards to columns in frontend code

- use the new `ctx.Locale.Tr` method

- cleanup template, remove useless newlines, classes, comments

- merge org-/user and repo level project template together

- move "new column" button into project toolbar

- move issue card (shared by projects and pinned issues) to shared

template, remove useless duplicated styles

- add search function to projects (to make the layout more similar to

milestones list where it is inherited from 😆)

- maybe more changes I forgot I've done 😆

Closes #24893


---------

Co-authored-by: silverwind <me@silverwind.io>

Even if GetDisplayName() is normally preferred elsewhere, this change

provides more consistency, as usernames are also always being shown

when participating in a conversation taking place in an issue or

a pull request. This change makes conversations easier to follow, as

you would not have to have a mental association between someone's

username and someone's real name in order to follow what is happening.

This behavior matches GitHub's. Optimally, both the username and the

full name (if applicable) could be shown, but such an effort is a

much bigger task that needs to be thought out well.

Fix #26129

Replace #26258

This PR introduces a transaction when creating a pull request, so that if any step fails, everything is rolled back and no dirty pull request is left behind.

---------

Co-authored-by: Giteabot <teabot@gitea.io>

This PR is an extended implementation of #25189 and builds upon the

proposal by @hickford in #25653, utilizing some ideas proposed

internally by @wxiaoguang.

Mainly, this PR consists of a mechanism to pre-register OAuth2 applications on startup, which can be enabled or disabled by modifying the `[oauth2].DEFAULT_APPLICATIONS` parameter in app.ini. The OAuth2 applications registered this way are marked as "locked" and can be neither deleted nor edited via the UI, to prevent confusing or unexpected behavior. Instead, they are removed if they are no longer enabled in the config.

The implemented mechanism can also be used to pre-register other OAuth2

applications in the future, if wanted.

Co-authored-by: hickford <mirth.hickford@gmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

---------

Co-authored-by: M Hickford <mirth.hickford@gmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

- The permalink and 'Reference in New Issue' URL of a renderable file (one where you can see both the source and a rendered version, such as markdown) doesn't contain `?display=source`. As a result, the URL doesn't have the intended effect, because the rendered version is shown by default instead of the source.
- Add `?display=source` to the permalink URL and to 'Reference in New Issue' if it's a renderable file.

- Add integration testing.

Refs: https://codeberg.org/forgejo/forgejo/pulls/1088

Co-authored-by: Gusted <postmaster@gusted.xyz>

Co-authored-by: Giteabot <teabot@gitea.io>

I noticed that `issue_service.CreateComment` adds transaction operations around `issues_model.CreateComment`. We can merge the two functions, which also avoids calling each other's methods in the `services` layer.

Co-authored-by: Giteabot <teabot@gitea.io>

Related to #26239

This PR makes some fixes:

- do not show the prompt for mirror repos and repos with pull request

units disabled

- use `commit_time` instead of `updated_unix`, as `commit_time` is the

real time when the branch was pushed

Fix #24662.

Replace #24822 and #25708 (although it has been merged)

## Background

In the past, Gitea supported issue searching with a keyword and

conditions in a less efficient way. It worked by searching for issues

with the keyword and obtaining limited IDs (as it is heavy to get all)

on the indexer (bleve/elasticsearch/meilisearch), and then querying with

conditions on the database to find a subset of the found IDs. This is

why the results could be incomplete.

To solve this issue, we need to store all fields that could be used as

conditions in the indexer and support both keyword and additional

conditions when searching with the indexer.

## Major changes

- Redefine `IndexerData` to include all fields that could be used as

filter conditions.

- Refactor `Search(ctx context.Context, kw string, repoIDs []int64,

limit, start int, state string)` to `Search(ctx context.Context, options

*SearchOptions)`, so it supports more conditions now.

- Change the data type stored in `issueIndexerQueue`. Use

`IndexerMetadata` instead of `IndexerData` in case the data has been

updated while it is in the queue. This also reduces the storage size of

the queue.

- Enhance searching with Bleve/Elasticsearch/Meilisearch, make them

fully support `SearchOptions`. Also, update the data versions.

- Keep most of the logic of the database indexer, but remove `issues.SearchIssueIDsByKeyword` in `models` to avoid confusion about where the entry point for searching issues is.

- Start a Meilisearch instance to test it in unit tests.

- Add unit tests with almost full coverage to test

Bleve/Elasticsearch/Meilisearch indexer.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

In the original implementation, we could only get the first 30 commit status records (the default page size). If there are more than 30 commit statuses, this leads to bug #25990. I made the following two changes:

- On the page, use the `db.ListOptions{ListAll: true}` parameter instead of `db.ListOptions{}`.
- The `GetLatestCommitStatus` function now checks whether a pager is being used.

Fixes #25990

The API should only return the real email address of a user if the caller is logged in. The check that is supposed to enforce this doesn't work, and this PR fixes it. This is not really a security issue, but it can lead to spam.

---------

Co-authored-by: silverwind <me@silverwind.io>

Fix #25934

Add an `ignoreGlobal` parameter to `reqUnitAccess` and only check globally disabled units when `ignoreGlobal` is false. This way, org-level and user-level projects are not affected by a globally disabled `repo.projects` unit.

Fixes #25918

The migration fails on MSSQL because xorm tries to update the primary

key column. xorm prevents this if the column is marked as auto

increment:

c622cdaf89/internal/statements/update.go (L38-L40)

I think it would be better if xorm checked for primary key columns here, because updating such columns is bad practice. It looks like that auto-increment check is meant to achieve the same thing.

fyi @lunny

This PR

- Fix #26093. Replace `time.Time` with `timeutil.TimeStamp`

- Fix #26135. Add missing `xorm:"extends"` to `CountLFSMetaObject` for

LFS meta object query

- Add a unit test for LFS meta object garbage collection


There's a bug in the recent logic: `CalcCommitStatus` always returns the first item of `statuses` or an error status, because `state` is declared with its default (empty) value, whereas it should start as `CommitStatusSuccess`.

Then

``` golang

if status.State.NoBetterThan(state) {

```

this `if` is always false unless `status.State` is `CommitStatusError`, which makes no sense.

So `lastStatus` is always `nil` or an error status. We then always return either the first item of `statuses` or an error status, which is why the commit status in the first picture is `Success` rather than `Failure`.

af1ffbcd63/models/git/commit_status.go (L204-L211)
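
The fix can be sketched in a self-contained form as follows. The types and the priority table are simplified stand-ins (the worst-to-best ordering of states is an assumption of this sketch), so this illustrates the idea and is not the exact code in `models/git/commit_status.go`.

```go
package main

import "fmt"

// CommitStatusState is a stand-in for the API commit status state type.
type CommitStatusState string

const (
	CommitStatusPending CommitStatusState = "pending"
	CommitStatusSuccess CommitStatusState = "success"
	CommitStatusError   CommitStatusState = "error"
	CommitStatusFailure CommitStatusState = "failure"
)

// priority ranks states from worst (0) to best (3); the ordering is assumed.
var priority = map[CommitStatusState]int{
	CommitStatusError:   0,
	CommitStatusFailure: 1,
	CommitStatusPending: 2,
	CommitStatusSuccess: 3,
}

// NoBetterThan reports whether css is at most as good as other.
func (css CommitStatusState) NoBetterThan(other CommitStatusState) bool {
	return priority[css] <= priority[other]
}

// CommitStatus is a pared-down status record.
type CommitStatus struct {
	Context string
	State   CommitStatusState
}

// CalcCommitStatus returns the worst status in the list.
func CalcCommitStatus(statuses []*CommitStatus) *CommitStatus {
	var lastStatus *CommitStatus
	// Start from the best state; the zero value "" ranks like the worst
	// state here, so nothing except an error status would ever match.
	state := CommitStatusSuccess
	for _, status := range statuses {
		if status.State.NoBetterThan(state) {
			state = status.State
			lastStatus = status
		}
	}
	if lastStatus == nil {
		if len(statuses) > 0 {
			lastStatus = statuses[0]
		} else {
			lastStatus = &CommitStatus{}
		}
	}
	return lastStatus
}

func main() {
	statuses := []*CommitStatus{
		{Context: "lint", State: CommitStatusSuccess},
		{Context: "test", State: CommitStatusFailure},
	}
	fmt.Println(CalcCommitStatus(statuses).State) // failure
}
```

With `state` left at its zero value, the same input would fall through to `statuses[0]` and report `success`.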

Co-authored-by: Giteabot <teabot@gitea.io>

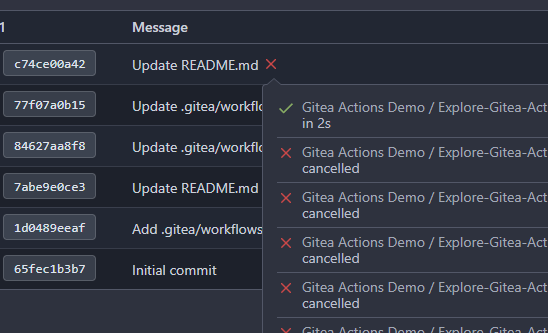

- Cancel running jobs if the event is push

- Add a new function `CancelRunningJobs` to cancel all running jobs of a

run

- Update `FindRunOptions` struct to include `Ref` field and update its

condition in `toConds` function

- Implement auto cancellation of running jobs in the same workflow in

`notify` function

related task: https://github.com/go-gitea/gitea/pull/22751/

---------

Signed-off-by: Bo-Yi Wu <appleboy.tw@gmail.com>

Signed-off-by: appleboy <appleboy.tw@gmail.com>

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: delvh <dev.lh@web.de>

Close #24544

Changes:

- Create `action_tasks_version` table to store the latest version of

each scope (global, org and repo).

- When a job with the status of `waiting` is created, the tasks version

of the scopes it belongs to will increase.

- When the status of a job already in the database is updated to

`waiting`, the tasks version of the scopes it belongs to will increase.

- On the Gitea side, `FetchTask()` queries the `action_tasks_version`

record for the scope of the runner that calls `FetchTask()`. If the

record does not exist, a row is inserted. Gitea then compares the

version passed by the runner with the version in the database and, if

they differ, tries to pick a task. Gitea always returns the latest

version from the database to the runner.
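
The version handshake described above can be sketched with an in-memory stand-in for the `action_tasks_version` table. All names here (`scope`, `versionStore`, `fetchTask`) are hypothetical, and the initial version of 1 is an assumption of the sketch rather than something the PR specifies.

```go
package main

import "fmt"

// scope is a hypothetical key for a tasks-version row; the zero value
// stands for the global scope.
type scope struct {
	OwnerID int64
	RepoID  int64
}

// versionStore is an in-memory stand-in for the action_tasks_version table.
type versionStore struct {
	versions map[scope]int64
}

func newVersionStore() *versionStore {
	return &versionStore{versions: make(map[scope]int64)}
}

// get returns the stored version for sc, inserting a row if none exists.
// Starting at 1 is an assumption of this sketch.
func (s *versionStore) get(sc scope) int64 {
	if _, ok := s.versions[sc]; !ok {
		s.versions[sc] = 1
	}
	return s.versions[sc]
}

// increase bumps the version of every scope a newly waiting job belongs to.
func (s *versionStore) increase(scopes ...scope) {
	for _, sc := range scopes {
		s.versions[sc] = s.get(sc) + 1
	}
}

// fetchTask compares the runner's version with the stored one. It reports
// whether a task pick should be attempted and always returns the latest version.
func (s *versionStore) fetchTask(sc scope, runnerVersion int64) (tryPick bool, latest int64) {
	latest = s.get(sc)
	return runnerVersion != latest, latest
}

func main() {
	store := newVersionStore()
	repo := scope{OwnerID: 1, RepoID: 2}

	tryPick, v := store.fetchTask(repo, 0)
	fmt.Println(tryPick, v) // true 1

	tryPick, v = store.fetchTask(repo, v)
	fmt.Println(tryPick, v) // false 1

	store.increase(repo) // a job entered the waiting state
	tryPick, v = store.fetchTask(repo, v)
	fmt.Println(tryPick, v) // true 2
}
```

A runner whose version already matches costs only a cheap version lookup, which is the point of the change.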

Related:

- Protocol: https://gitea.com/gitea/actions-proto-def/pulls/10

- Runner: https://gitea.com/gitea/act_runner/pulls/219

To avoid deadlock problems, almost all database-related functions should

take ctx as the first parameter.

This PR refactors some of these functions.

Fix #25776. Close #25826.

In the discussion of #25776, @wolfogre's suggestion was to remove the

commit status of `running` and `warning` to keep it consistent with

GitHub.

references:

- https://docs.github.com/en/rest/commits/statuses?apiVersion=2022-11-28#about-commit-statuses

## ⚠️ BREAKING ⚠️

So the commit status of Gitea will be consistent with GitHub, only

`pending`, `success`, `error` and `failure`, while `warning` and

`running` are not supported anymore.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

The current actions artifacts implementation only supports single-file

artifacts. To support uploading multiple files, it needs to:

- save each file as its own db record with the same run-id, the same

artifact-name and the proper artifact-path

- change the artifact upload URL to omit the artifact-id, since multiple

files create multiple artifact-ids

- support `path` in the download-artifact action; the artifact should be

downloaded to `{path}/{artifact-path}`

- provide a zip download link in the artifacts list on the summary page

of the repo action view, whether the artifact contains a single file or

multiple files

Before:

After:

This issue comes from the change in #25468.

`LoadProject` will always return at least one record, so the old code

used `ProjectID` to check whether an issue is linked to a project.

As in other `issue.LoadXXX` functions, we need to check the return value

from `xorm.Session.Get`.

The current unit tests only cover `issueList.LoadAttributes()` and

don't cover `issue.LoadAttributes()`, so I added a new test for

`issue.LoadAttributes()` in this PR.

---------

Co-authored-by: Denys Konovalov <privat@denyskon.de>

Fixes (?) #25538

Fixes https://codeberg.org/forgejo/forgejo/issues/972

Regression #23879

#23879 introduced a change which prevents read access to packages if a

user is not a member of an organization.

That PR also contained a change which disallows package access if the

team unit is configured with "no access" for packages. I don't think

this change makes sense (at the moment). It may be relevant for private

orgs. But for public or limited orgs that's useless because an

unauthorized user would have more access rights than the team member.

This PR restores the old behaviour "If a user has read access for an

owner, they can read packages".

---------

Co-authored-by: Giteabot <teabot@gitea.io>

related #16865

This PR adds an accessibility check before mounting container blobs.

---------

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Co-authored-by: silverwind <me@silverwind.io>

This PR displays a pull request creation hint on the repository home

page when there are newly created branches with no pull request. Only

the 2 most recently updated branches from the last 6 hours are displayed.

Inspired by #14003

Replace #14003

Resolves #311

Resolves #13196

Resolves #23743

co-authored by @kolaente

Add a few extra test cases and test functions for the collaboration

model to get everything covered by tests (except for error handling, as

we cannot suddenly mock errors from the database).

Co-authored-by: Gusted <postmaster@gusted.xyz>

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/825

Co-authored-by: Gusted <gusted@noreply.codeberg.org>

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

Fix #25558

Extract from #22743

This PR adds a repository check when creating or deleting branches via

the API: this is not allowed for mirror or archived repositories.

When a branch's commit message is too long, the column may be too

short (TEXT 16K for MySQL).

This PR fixes it by storing only the summary, because these messages are

only shown on the branch list or possibly in a future search.

Related #14180

Related #25233

Related #22639

Close #19786

Related #12763

This PR changes all branch retrieval from reading git data to reading

the database, to reduce git read operations.

- [x] Sync git branch information into the database when pushing git data

- [x] Create a new table `Branch`, merge some columns of `DeletedBranch`

into `Branch` table and drop the table `DeletedBranch`.

- [x] Read `Branch` table when visiting the `code` -> `branch` page

- [x] Read `Branch` table when listing branch names in the `code` page dropdown

- [x] Read `Branch` table when listing refs on the git ref compare page

- [x] Provide a button in admin page to manually sync all branches.

- [x] Sync branches if repository is not empty but database branches are

empty when visiting pages with branches list

- [x] Use `commit_time desc` as the default `FindBranch` order to stay

consistent with the previous behavior; deleted branches are always at the end.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

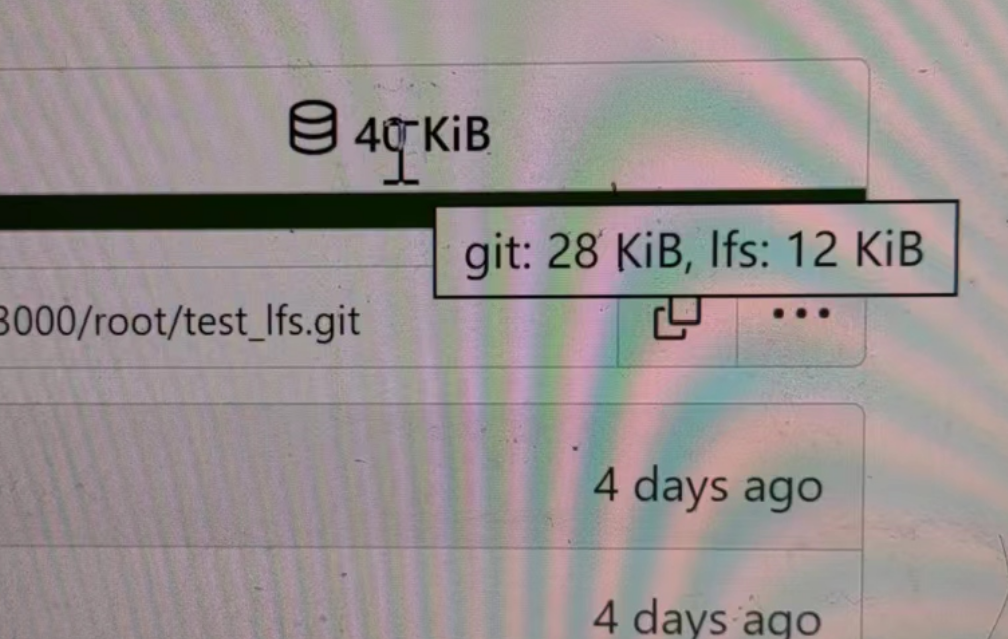

related to #21820

- Split `Size` in the repository table into two new columns: `GitSize`

for git size and `LFSSize` for LFS data. The full size is still stored

in the `Size` column.

- Show the full size in the UI, with each component shown in a `title`; example:

- Return full size in api response.

---------

Signed-off-by: a1012112796 <1012112796@qq.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: DmitryFrolovTri <23313323+DmitryFrolovTri@users.noreply.github.com>

Co-authored-by: Giteabot <teabot@gitea.io>

Fix #25451.

Bugfixes:

- When stopping the zombie or endless tasks, set `LogInStorage` to true

after transferring the file to storage. This was missing, so it could

write to a nonexistent file in DBFS because `LogInStorage` was false.

- Always update `ActionTask.Updated` when there's a new state reported

by the runner, even if there's no change. This is to avoid the task

being judged as a zombie task.

Enhancement:

- Support `Stat()` for DBFS file.

- `WriteLogs` refuses to write if it could result in content holes.

---------

Co-authored-by: Giteabot <teabot@gitea.io>

Fix #25088

This PR adds support for the

[`pull_request_target`](https://docs.github.com/en/actions/using-workflows/events-that-trigger-workflows#pull_request_target)

workflow trigger. `pull_request_target` is similar to `pull_request`,

but the workflow triggered by the `pull_request_target` event runs in

the context of the base branch of the pull request rather than the head

branch. Since the workflow from the base is considered trusted, it can

access the secrets and doesn't need approvals to run.

A recent xorm change to `Sync` means it no longer warns when the

database has columns that are not listed on the struct. So we only need

these warning logs when syncing the whole database, not in the migrations' `Sync`.

This PR removes almost all of the unnecessary warning logs on migrations.

The logs below will no longer appear in CI.

```log
2023/06/23 17:51:32 models/db/engine.go:191:InitEngineWithMigration() [W] Table gtestschema.project has column creator_id but struct has not related field
2023/06/23 17:51:32 models/db/engine.go:191:InitEngineWithMigration() [W] Table gtestschema.project has column is_closed but struct has not related field
2023/06/23 17:51:32 models/db/engine.go:191:InitEngineWithMigration() [W] Table gtestschema.project has column board_type but struct has not related field
2023/06/23 17:51:32 models/db/engine.go:191:InitEngineWithMigration() [W] Table gtestschema.project has column type but struct has not related field
2023/06/23 17:51:32 models/db/engine.go:191:InitEngineWithMigration() [W] Table gtestschema.project has column closed_date_unix but struct has not related field
2023/06/23 17:51:32 models/db/engine.go:191:InitEngineWithMigration() [W] Table gtestschema.project has column created_unix but struct has not related field
2023/06/23 17:51:32 models/db/engine.go:191:InitEngineWithMigration() [W] Table gtestschema.project has column updated_unix but struct has not related field
2023/06/23 17:51:32 models/db/engine.go:191:InitEngineWithMigration() [W] Table gtestschema.project has column card_type but struct has not related field
```

this will allow us to fully localize it later

PS: we can not migrate back as the old value was a one-way conversion

prepare for #25213

---

*Sponsored by Kithara Software GmbH*

# The problem

There were many "path tricks":

* By default, Gitea uses its program directory as its work path

* Gitea tries to use the "work path" to guess its "custom path" and

"custom conf (app.ini)"

* Users might want to use other directories as work path

* The non-default work path should be passed to Gitea by GITEA_WORK_DIR

or "--work-path"

* But some Gitea processes are started without these values

    * The "serv" process started by OpenSSH server

    * The CLI sub-commands started by site admin

* The paths are guessed by SetCustomPathAndConf again and again

* The default values of "work path / custom path / custom conf" can be

changed when compiling

# The solution

* Use `InitWorkPathAndCommonConfig` to handle these path tricks, and use

test code to cover its behaviors.

* When Gitea's web server runs, write the WORK_PATH to "app.ini", this

value must be the most correct one, because if this value is not right,

users would find that the web UI doesn't work and then they should be

able to fix it.

* Then all other sub-commands can use the WORK_PATH in app.ini to

initialize their paths.

* By the way, when Gitea starts for git protocol, it shouldn't output

any log, otherwise the git protocol gets broken and client blocks

forever.

The "work path" priority is: WORK_PATH in app.ini > cmd arg --work-path

> env var GITEA_WORK_DIR > builtin default

The "app.ini" searching order is: cmd arg --config > cmd arg "work path

/ custom path" > env var "work path / custom path" > builtin default
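
As a rough illustration of the "work path" priority above, the following sketch picks the first non-empty candidate and rejects non-absolute paths. The function name `resolveWorkPath` and its parameters are hypothetical and only stand in for the behavior described; this is not the code of `InitWorkPathAndCommonConfig`.

```go
package main

import (
	"errors"
	"fmt"
	"path/filepath"
)

// resolveWorkPath picks the first non-empty candidate in priority order:
// WORK_PATH in app.ini, then --work-path, then GITEA_WORK_DIR, then the
// builtin default. Hypothetical helper for illustration only.
func resolveWorkPath(iniWorkPath, argWorkPath, envWorkDir, builtinDefault string) (string, error) {
	for _, candidate := range []string{iniWorkPath, argWorkPath, envWorkDir, builtinDefault} {
		if candidate == "" {
			continue
		}
		// The chosen work path must be absolute, otherwise report an error.
		if !filepath.IsAbs(candidate) {
			return "", fmt.Errorf("work path %q must be absolute", candidate)
		}
		return candidate, nil
	}
	return "", errors.New("no work path configured")
}

func main() {
	// No WORK_PATH in app.ini, so the command line argument wins over the
	// environment variable and the builtin default.
	p, err := resolveWorkPath("", "/srv/gitea", "/var/lib/gitea", "/usr/local/gitea")
	fmt.Println(p, err)
}
```

On a Unix-like system this selects `/srv/gitea`; a relative value in the winning position produces an error instead of silently falling back.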

## ⚠️ BREAKING

If your instance's "work path / custom path / custom conf" doesn't meet

the requirements (e.g. work path must be absolute), Gitea will report a

fatal error and exit. You need to set these values according to the

error log.

----

Close #24818

Close #24222

Close #21606

Close #21498

Close #25107

Close #24981

Maybe close #24503

Replace #23301

Replace #22754

And maybe more

Follow up #22405

Fix #20703

This PR rewrites the storage configuration read sequence, with some

breaking changes and tests. It is stricter than before and also fixes

some inheritance problems.

- Move storage's MinioConfig struct into setting, so after the

configuration is loaded, the values are stored in the struct rather than

left in a section.

- All configuration for a storage should be stored in one section;

configuration items cannot be overridden by multiple sections. The

priority of configuration is `[attachment]` > `[storage.attachments]` |

`[storage.customized]` > `[storage]` > `default`

- The extra override configuration items, currently `SERVE_DIRECT`,

`MINIO_BASE_PATH` and `MINIO_BUCKET`, can be configured in another

section. The priority of the override configuration is `[attachment]` >

`[storage.attachments]` > `default`.

- Add more tests for storage configuration.

- Update the storage documentation.
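
The "one section wins" rule can be sketched as a first-match lookup over the candidate sections in priority order. `pickSection` is a hypothetical helper and the section names come from the list above; the sketch leaves out the `[storage.customized]` case and how the real loader decides that a section counts as present.

```go
package main

import "fmt"

// pickSection returns the first candidate section that exists in the
// configuration, or "default" when none of them is present.
// Hypothetical helper for illustration only.
func pickSection(existing map[string]bool, candidates ...string) string {
	for _, name := range candidates {
		if existing[name] {
			return name
		}
	}
	return "default"
}

func main() {
	// Sections present in a hypothetical app.ini.
	existing := map[string]bool{"storage": true, "storage.attachments": true}

	// Main storage configuration for attachments.
	fmt.Println(pickSection(existing, "attachment", "storage.attachments", "storage")) // storage.attachments

	// Override items such as SERVE_DIRECT do not consult the generic [storage] section.
	fmt.Println(pickSection(map[string]bool{"storage": true}, "attachment", "storage.attachments")) // default
}
```

Because the whole configuration comes from the single winning section, items are not merged across sections.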

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

close #24540

related:

- Protocol: https://gitea.com/gitea/actions-proto-def/pulls/9

- Runner side: https://gitea.com/gitea/act_runner/pulls/201

changes:

- Add column of `labels` to table `action_runner`, and combine the value

of `agent_labels` and `custom_labels` column to `labels` column.

- Store `labels` when registering `act_runner`.

- Update `labels` when `act_runner` starts and calls `Declare`.

- Users can no longer modify the `custom labels` on the edit page.

other changes:

- Store `version` when registering `act_runner`.

- If the runner is the latest version, parse the version from `Declare`;

older runners still have their version parsed from the request header.

Enable deduplication of unofficial reviews. When pull requests are

configured to include all approvers, not just official ones, in the

default merge messages it was possible to generate duplicated

Reviewed-by lines for a single person. Add an option to find only

distinct reviews for a given query.
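
The deduplication idea can be shown with an in-memory sketch that keeps one review per reviewer. The PR itself adds an option to the review query; the `Review` struct and `distinctByReviewer` below are hypothetical and only illustrate the intended result.

```go
package main

import "fmt"

// Review is a pared-down, hypothetical review record.
type Review struct {
	ID         int64
	ReviewerID int64
	Official   bool
}

// distinctByReviewer keeps only the first review seen for each reviewer,
// preserving the input order.
func distinctByReviewer(reviews []Review) []Review {
	seen := make(map[int64]bool, len(reviews))
	out := make([]Review, 0, len(reviews))
	for _, r := range reviews {
		if seen[r.ReviewerID] {
			continue
		}
		seen[r.ReviewerID] = true
		out = append(out, r)
	}
	return out
}

func main() {
	reviews := []Review{
		{ID: 1, ReviewerID: 10, Official: true},
		{ID: 2, ReviewerID: 11},
		{ID: 3, ReviewerID: 10}, // second approval by the same person
	}
	for _, r := range distinctByReviewer(reviews) {
		fmt.Printf("Reviewed-by: user %d\n", r.ReviewerID)
	}
}
```

Each reviewer then contributes a single Reviewed-by line to the default merge message.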

fixes #24795

---------

Signed-off-by: Cory Todd <cory.todd@canonical.com>

Co-authored-by: Giteabot <teabot@gitea.io>

## Changes

- Adds the following high level access scopes, each with `read` and

`write` levels:

    - `activitypub`

    - `admin` (hidden if user is not a site admin)

    - `misc`

    - `notification`

    - `organization`

    - `package`

    - `issue`

    - `repository`

    - `user`

- Adds new middleware function `tokenRequiresScopes()` in addition to

`reqToken()`

    - `tokenRequiresScopes()` is used for each high-level api section

        - _if_ a scoped token is present, checks that the required scope is

          included based on the section and HTTP method

    - `reqToken()` is used for individual routes

        - checks that required authentication is present (but does not check

          scope levels as this will already have been handled by

          `tokenRequiresScopes()`)

- Adds migration to convert old scoped access tokens to the new set of

scopes

- Updates the user interface for scope selection

### User interface example

<img width="903" alt="Screen Shot 2023-05-31 at 1 56 55 PM"

src="https://github.com/go-gitea/gitea/assets/23248839/654766ec-2143-4f59-9037-3b51600e32f3">

<img width="917" alt="Screen Shot 2023-05-31 at 1 56 43 PM"

src="https://github.com/go-gitea/gitea/assets/23248839/1ad64081-012c-4a73-b393-66b30352654c">

## tokenRequiresScopes Design Decision

- `tokenRequiresScopes()` was added to more reliably cover api routes.

For an incoming request, this function uses the given scope category

(say `AccessTokenScopeCategoryOrganization`) and the HTTP method (say

`DELETE`) and verifies that any scoped tokens in use include

`write:organization`.

- `reqToken()` is used to enforce auth for individual routes that

require it. If a scoped token is not present for a request,

`tokenRequiresScopes()` will not return an error
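
The category-plus-method check can be sketched as below. This assumes only `read` and `write` levels exist (as in the Changes list), that every mutating HTTP method needs the `write` level, and that a `write` scope also covers `read`; `requiredScope` and `tokenAllows` are hypothetical helpers, not the middleware itself.

```go
package main

import (
	"fmt"
	"net/http"
)

// requiredScope builds the "<level>:<category>" scope needed for a request.
// Mapping all mutating methods to "write" is an assumption of this sketch.
func requiredScope(category, method string) string {
	level := "read"
	switch method {
	case http.MethodPost, http.MethodPut, http.MethodPatch, http.MethodDelete:
		level = "write"
	}
	return level + ":" + category
}

// tokenAllows reports whether the token's scopes cover the request.
// Treating a write scope as implying read is also an assumption.
func tokenAllows(tokenScopes []string, category, method string) bool {
	need := requiredScope(category, method)
	for _, s := range tokenScopes {
		if s == need || s == "write:"+category {
			return true
		}
	}
	return false
}

func main() {
	scopes := []string{"read:organization", "write:repository"}
	fmt.Println(requiredScope("organization", http.MethodDelete))       // write:organization
	fmt.Println(tokenAllows(scopes, "organization", http.MethodGet))    // true
	fmt.Println(tokenAllows(scopes, "organization", http.MethodDelete)) // false
	fmt.Println(tokenAllows(scopes, "repository", http.MethodGet))      // true
}
```

Keeping the scope check per section and the authentication check per route is what lets `tokenRequiresScopes()` stay silent when no scoped token is present.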

## TODO

- [x] Alphabetize scope categories

- [x] Change 'public repos only' to a radio button (private vs public).

Also expand this to organizations

- [X] Disable token creation if no scopes selected. Alternatively, show

warning

- [x] `reqToken()` is missing from many `POST/DELETE` routes in the api.

`tokenRequiresScopes()` only checks that a given token has the correct

scope, `reqToken()` must be used to check that a token (or some other

auth) is present.

- _This should be addressed in this PR_

- [x] The migration should be reviewed very carefully in order to

minimize access changes to existing user tokens.

- _This should be addressed in this PR_

- [x] Link to the API swagger documentation, clarify what

read/write/delete levels correspond to

- [x] Review cases where more than one scope is needed as this directly

deviates from the api definition.

- _This should be addressed in this PR_

- For example:

```go
m.Group("/users/{username}/orgs", func() {
	m.Get("", reqToken(), org.ListUserOrgs)
	m.Get("/{org}/permissions", reqToken(), org.GetUserOrgsPermissions)
}, tokenRequiresScopes(auth_model.AccessTokenScopeCategoryUser,
	auth_model.AccessTokenScopeCategoryOrganization),
	context_service.UserAssignmentAPI())
```

## Future improvements

- [ ] Add required scopes to swagger documentation

- [ ] Redesign `reqToken()` to be opt-out rather than opt-in

- [ ] Subdivide scopes like `repository`

- [ ] Once a token is created, if it has no scopes, we should display

text instead of an empty bullet point

- [ ] If the 'public repos only' option is selected, should read

categories be selected by default

Closes #24501

Closes #24799

Co-authored-by: Jonathan Tran <jon@allspice.io>

Co-authored-by: Kyle D <kdumontnu@gmail.com>

Co-authored-by: silverwind <me@silverwind.io>