The Codeberg docs refer to the strings printed by the Forgejo SSH servers, so these strings are user-facing and should be updated to the new product name.

(cherry picked from commit 103991d73f)

(cherry picked from commit 2a0d3f85f1)

(cherry picked from commit eb2b4ce388)

(cherry picked from commit 0998b51716)

[BRANDING] forgejo log message

(cherry picked from commit d51a046ebe)

(cherry picked from commit d66e1c7b6e)

(cherry picked from commit b5bffe4ce8)

(cherry picked from commit 3fa776d856)

(cherry picked from commit 18d064f472)

(cherry picked from commit c95094e355)

(cherry picked from commit 5784290bc4)

(cherry picked from commit aee336886b)

(cherry picked from commit ec2f60b516)

# ⚠️ Breaking

Many deprecated queue config options are removed (actually, they should

have been removed in 1.18/1.19).

If you see the fatal message when starting Gitea: "Please update your

app.ini to remove deprecated config options", please follow the error

messages to remove these options from your app.ini.

Example:

```

2023/05/06 19:39:22 [E] Removed queue option: `[indexer].ISSUE_INDEXER_QUEUE_TYPE`. Use new options in `[queue.issue_indexer]`

2023/05/06 19:39:22 [E] Removed queue option: `[indexer].UPDATE_BUFFER_LEN`. Use new options in `[queue.issue_indexer]`

2023/05/06 19:39:22 [F] Please update your app.ini to remove deprecated config options

```

Many options in `[queue]` are dropped, including:

`WRAP_IF_NECESSARY`, `MAX_ATTEMPTS`, `TIMEOUT`, `WORKERS`, `BLOCK_TIMEOUT`, `BOOST_TIMEOUT`, `BOOST_WORKERS`. They can be removed from app.ini.

# The problem

The old queue package has some legacy problems:

* complexity: I doubt many people could tell how it works.

* maintainability: Too many channels and mutex/cond are mixed together, and too many different structs/interfaces depend on each other.

* stability: due to the complexity and poor maintainability, there are sometimes strange bugs that are difficult to debug, and some code has no tests (indeed, some code is difficult to test because a lot of things are mixed together).

* general applicability: although it is called "queue", its behavior is

not a well-known queue.

* scalability: it doesn't seem easy to make it work with a cluster

without breaking its behaviors.

It came from some very old code that was kept to "avoid breaking" things. However, its technical debt is too heavy now. It's a good time to introduce a better "queue" package.

# The new queue package

It keeps using the old config and concepts as much as possible.

* It only contains two major kinds of concepts:

  * The "base queue": channel, levelqueue, redis

    * They have the same abstraction, the same interface, and they are tested by the same testing code.

  * The "WorkerPoolQueue": it uses the "base queue" to provide the "worker pool" function and calls the "handler" to process the data in the base queue.

* The new code doesn't do "PushBack"

  * Think about a queue with many workers: "PushBack" can't guarantee the order of re-queued unhandled items, so the new code just does a "normal push".

* The new code doesn't do "pause/resume"

  * "pause/resume" was designed to handle a handler's failure, e.g. the document indexer (elasticsearch) is down.

  * If a queue is paused for a long time, either the producers block or the new items are dropped.

  * The new code doesn't do such a "pause/resume" trick; it's not a common queue behavior and it doesn't help much.

  * If there are unhandled items, the "push" function just blocks for a few seconds, then re-queues them and retries.

* The new code doesn't do "worker booster"

  * Gitea's queue handlers are light functions and the only cost is the goroutine, so it doesn't make sense to "boost" them.

  * The new code only uses the "max worker number" to limit the concurrent workers.

* The new "Push" never blocks forever

  * Instead of creating more and more blocking goroutines, returning an error is friendlier to the server and to the end user.

There are more details in code comments: eg: the "Flush" problem, the

strange "code.index" hanging problem, the "immediate" queue problem.

Almost ready for review.

TODO:

* [x] add some necessary comments during review

* [x] add some more tests if necessary

* [x] update documents and config options

* [x] test max worker / active worker

* [x] re-run the CI tasks to see whether any test is flaky

* [x] improve the `handleOldLengthConfiguration` to provide more

friendly messages

* [x] fine tune default config values (eg: length?)


Co-authored-by: @awkwardbunny

This PR adds a Debian package registry. You can follow [this

tutorial](https://www.baeldung.com/linux/create-debian-package) to build

a *.deb package for testing. Source packages are not supported at the

moment and I did not find documentation of the architecture "all" and

how these packages should be treated.

---------

Co-authored-by: Brian Hong <brian@hongs.me>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

The idea is to use a Layered Asset File-system (modules/assetfs/layered.go)

For example, with two layers, "custom" and "builtin", accessing the asset "my/page.tmpl" makes the Layered Asset File-system try the "custom" assets first and fall back to the "builtin" assets if the file is not found there.

This approach greatly simplifies a lot of code and makes it testable.

Other changes:

* Simplify the AssetsHandlerFunc code

* Simplify the `gitea embedded` sub-command code

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fixes: #19687

The `--quiet` option to `gitea dump` silences informational and less important messages, but still logs warnings and errors to the console.

Very useful in combination with cron backups and '-f -'.

Since --verbose and --quiet are incompatible with each other I made it

an error to specify both. To get the error message to be printed to

stderr I had to make this test after the NewServices()-call, which is

why there are three new blocks of code instead of two.

# Why this PR comes

First, I'd like to help users like the one in #23636 (there are a lot of them).

The unclear "Internal Server Error" is quite annoying: it scares users and frustrates contributors, and nobody knows what happened.

So, it's always good to provide meaningful messages to end users (of

course, do not leak sensitive information).

When I started working on the "response message to end users", I found

that the related code has a lot of technical debt. A lot of copy&paste

code, unclear fields and usages.

So I think it's good to make everything clear.

# Tech Backgrounds

Gitea has many sub-commands, some are used by admins, some are used by

SSH servers or Git Hooks. Many sub-commands use "internal API" to

communicate with Gitea web server.

Before, the Gitea server always used `StatusCode + Json "err" field` to return messages.

* The CLI sub-commands: they expect to show all error related messages

to site admin

* The Serv/Hook sub-commands (for git clients): they could only show

safe messages to end users, the error log could only be recorded by

"SSHLog" to Gitea web server.

The old design assumes that:

* If the StatusCode is 500 (in some functions), then the "err" field is an error log and shouldn't be exposed to the git client.

* If the StatusCode is 40x, then the "err" field could be exposed. And some functions always read the "err" field no matter what the StatusCode is.

The old code is not strict, and it's difficult to distinguish the

messages clearly and then output them correctly.

# This PR

To help to remove duplicate code and make everything clear, this PR

introduces `ResponseExtra` and `requestJSONResp`.

* `ResponseExtra` is a struct which contains "extra" information about an internal API response, including StatusCode, UserMsg and Error

* `requestJSONResp` is a generic function which can be used for all

cases to help to simplify the calls.

* Remove all `map["err"]`, always use `private.Response{Err}` to

construct error messages.

* User messages and error messages are separated clearly, the `fail` and

`handleCliResponseExtra` will output correct messages.

* Replace all `Internal Server Error` messages with meaningful (still

safe) messages.

This PR removes more than 300 lines while making the git client messages clearer.

Many gitea-serv/git-hook related essential functions are covered by

tests.

---------

Co-authored-by: delvh <dev.lh@web.de>

Close #23622

As described in the issue, disabling the LFS/Package settings will cause

errors when running `gitea dump` or `gitea doctor`. We need to check the

settings and the related operations should be skipped if the settings

are disabled.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Creating the pid file should not belong to the setting package, and only the web command needs it. So this PR moves pidfile creation from the setting package to the web command package to keep the setting package more readable.

I marked this as `break` because the PIDFile path moved. For those who

have used the pid build argument, it has to be changed.

---------

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Support iterating over a subdirectory in the `ObjectStorage.Iterator` method.

It's required for https://github.com/go-gitea/gitea/pull/22738 to make

artifact files cleanable.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

When there is an error creating a new openIDConnect authentication source, try to handle the error a little better.

Close #23283

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Replace #23350.

Refactor `setting.Database.UseMySQL` to `setting.Database.Type.IsMySQL()` to avoid mismatches between `Type` and `UseXXX`.

This refactor can fix the bug mentioned in #23350, so it should be

backported.
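The idea of deriving the check from `Type` instead of keeping a separate `UseXXX` flag can be sketched like this. The literal values `"mysql"` and `"sqlite3"` and the `databaseSettings` struct are assumptions for illustration, not taken from the PR.

```go
package main

// DatabaseType is a sketch of a string-backed database type.
// The literal values below are assumptions for illustration.
type DatabaseType string

// IsMySQL reports whether the configured type is MySQL.
func (t DatabaseType) IsMySQL() bool { return t == "mysql" }

// IsSQLite3 reports whether the configured type is SQLite3.
func (t DatabaseType) IsSQLite3() bool { return t == "sqlite3" }

// databaseSettings keeps a single source of truth: there is no separate
// UseMySQL flag that could drift away from Type.
type databaseSettings struct {
	Type DatabaseType
}
```

With this shape, changing `Type` is enough: every `IsXXX()` answer follows automatically, so the two pieces of state can no longer disagree.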

Close #23137

The old code is too old (8-9 years ago)

Let's try to execute the git commands from git bin home directly.

The verb has been checked above, it could only be:

* git-upload-pack

* git-upload-archive

* git-receive-pack

* git-lfs-authenticate

Some bugs are caused by a lack of unit tests in fundamental packages. This PR refactors the `setting` package so that creating a unit test is easier than before.

- Every `LoadFromXXX` function has been split into two functions: `InitProviderFromXXX` and `LoadCommonSettings`. The first function only includes the code to create or instantiate an ini file. The second function loads the common settings.

- It also renames all functions in setting from `newXXXService` to

`loadXXXSetting` or `loadXXXFrom` to make the function name less

confusing.

- Move `XORMLog` to `SQLLog` because it's a better name for that.

Maybe we should finally move these `loadXXXSetting` into the `XXXInit`

function? Any idea?

---------

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: delvh <dev.lh@web.de>

This PR refactors and improves the password hashing code within Gitea and makes it possible for server administrators to set the password hashing parameters.

In addition, it takes the opportunity to adjust the settings for `pbkdf2` in order to make the hashing a little stronger.

The majority of this work was inspired by PR #14751 and I would like to

thank @boppy for their work on this.

Thanks to @gusted for the suggestion to adjust the `pbkdf2` hashing

parameters.

Close #14751

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

To avoid loading the same data more than once in an HTTP request, we can use a context cache. For example, some pages may load a user with the same ID from the database in different areas of the same page, but the code is hidden in two different, deeply nested pieces of logic. How should we share the user? With this PR, if both entry functions accept `context.Context` as the first parameter, we just need to refactor `GetUserByID` to reuse the user from the context cache. Then the user will not be loaded twice in one HTTP request.

But of course, sometimes we would like to reload an object from the

database, that's why `RemoveContextData` is also exposed.

The core context cache is here. It defines a new context:

```go
type cacheContext struct {
	ctx  context.Context
	data map[any]map[any]any
	lock sync.RWMutex
}

var cacheContextKey = struct{}{}

func WithCacheContext(ctx context.Context) context.Context {
	return context.WithValue(ctx, cacheContextKey, &cacheContext{
		ctx:  ctx,
		data: make(map[any]map[any]any),
	})
}
```

Then you can use the four functions below to read, write and delete data within the same context.

```go
func GetContextData(ctx context.Context, tp, key any) any
func SetContextData(ctx context.Context, tp, key, value any)
func RemoveContextData(ctx context.Context, tp, key any)
func GetWithContextCache[T any](ctx context.Context, cacheGroupKey string, cacheTargetID any, f func() (T, error)) (T, error)
```

Then let's take a look at how `GetSetting` in the `system` package uses it.

```go
func GetSetting(ctx context.Context, key string) (string, error) {
	return cache.GetWithContextCache(ctx, contextCacheKey, key, func() (string, error) {
		return cache.GetString(genSettingCacheKey(key), func() (string, error) {
			res, err := GetSettingNoCache(ctx, key)
			if err != nil {
				return "", err
			}
			return res.SettingValue, nil
		})
	})
}
```

First, it checks whether the context data includes the setting object for the key. If not, it queries the global cache, which may be in memory or a Redis cache. If the object is not there either, it gets the object from the database. Finally, if the object came from the global cache or the database, it is stored in the context cache.

An object stored in the context cache is only destroyed once the context is gone.

As part of administration sometimes it is appropriate to forcibly tell

users to update their passwords.

This PR creates a new command `gitea admin user must-change-password`

which will set the `MustChangePassword` flag on the provided users.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Fixes #19555

Test-Instructions:

https://github.com/go-gitea/gitea/pull/21441#issuecomment-1419438000

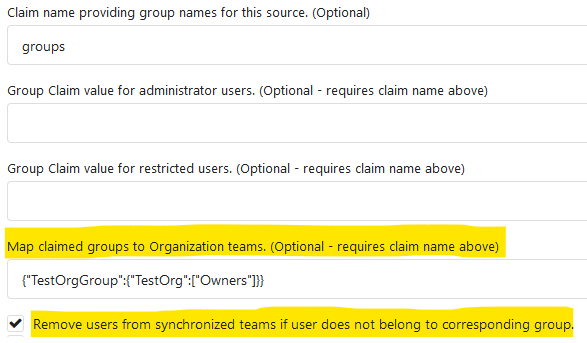

This PR implements the mapping of user groups provided by OIDC providers to organization teams in Gitea. The main part is a refactoring of the existing LDAP code to make it usable from different providers.

Refactorings:

- Moved the router auth code from module to service because of import

cycles

- Changed some model methods to take a `Context` parameter

- Moved the mapping code from LDAP to a common location

I've tested it with Keycloak but other providers should work too. The

JSON mapping format is the same as for LDAP.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Follow-up for #20908 to allow setting the scopes when creating a new access token via the CLI.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

- Merge the files `compare.go` and `slice.go` into `slice.go`.

- Fix `ExistsInSlice`, it's buggy

  - It uses `sort.Search`, so it assumes that the input slice is sorted.

  - It passes `func(i int) bool { return slice[i] == target }` to `sort.Search`, which is incorrect; check the doc of `sort.Search`.

- Combine `IsInt64InSlice(int64, []int64)` and `ExistsInSlice(string, []string)` into `SliceContains[T]([]T, T)`.

- Combine `IsSliceInt64Eq([]int64, []int64)` and `IsEqualSlice([]string, []string)` into `SliceSortedEqual[T]([]T, []T)`.

- Add `SliceEqual[T]([]T, []T)` as a distinction from `SliceSortedEqual[T]([]T, []T)`.

- Redesign `RemoveIDFromList([]int64, int64) ([]int64, bool)` to

`SliceRemoveAll[T]([]T, T) []T`.

- Add `SliceContainsFunc[T]([]T, func(T) bool)` and

`SliceRemoveAllFunc[T]([]T, func(T) bool)` for general use.

- Add comments to explain why not `golang.org/x/exp/slices`.

- Add unit tests.
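The `sort.Search` problem is easy to reproduce. In the sketch below, `buggyExists` is reconstructed from the description in the list above, and `sliceContains` and `sliceRemoveAll` are hypothetical lowercase stand-ins for the generic helpers, not the exact implementations from the PR. `sort.Search` expects a predicate that is false for a prefix of the indexes and true for the rest, which an equality test is not.

```go
package main

import "sort"

// buggyExists mirrors the misuse described above: sort.Search does a binary
// search for the first index where the predicate is true, so an equality
// predicate can miss elements even in a sorted slice.
func buggyExists(target string, slice []string) bool {
	i := sort.Search(len(slice), func(i int) bool { return slice[i] == target })
	return i < len(slice)
}

// sliceContains is a linear scan that works for any comparable element type
// and makes no assumption about ordering.
func sliceContains[T comparable](s []T, target T) bool {
	for _, v := range s {
		if v == target {
			return true
		}
	}
	return false
}

// sliceRemoveAll returns a new slice without any element equal to target.
func sliceRemoveAll[T comparable](s []T, target T) []T {
	out := make([]T, 0, len(s))
	for _, v := range s {
		if v != target {
			out = append(out, v)
		}
	}
	return out
}
```

For the sorted input `[]string{"a", "b", "c"}`, `buggyExists("a", ...)` reports false because the binary search probes index 1 first, sees a false predicate, and moves right past the match.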

Change all license headers to comply with REUSE specification.

Fix #16132

Co-authored-by: flynnnnnnnnnn <flynnnnnnnnnn@github>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

This PR adds a context parameter to a bunch of methods. Some helper

`xxxCtx()` methods got replaced with the normal name now.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The purpose of #18982 is to improve the SMTP mailer, but there were some

unrelated changes made to the SMTP auth in

d60c438694

This PR reverts these unrelated changes and fixes #21744

Related #20471

This PR adds global quota limits for the package registry. Settings for individual users/orgs can be added in a separate PR using the settings table.

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

closes #20683

Add an option to `gitea dump` to skip the bleve indexes, which can become quite large (in my case the same size as the repos) and can be regenerated after a restore.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: zeripath <art27@cantab.net>

Only load SECRET_KEY and INTERNAL_TOKEN if they exist.

Never write the config file if the keys do not exist, which was only a fallback for Gitea upgraded from < 1.5

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>


Fixed a typo.

A testing cleanup.

This pull request replaces `os.MkdirTemp` with `t.TempDir`. We can use the `T.TempDir` function from the `testing` package to create a temporary directory. The directory created by `T.TempDir` is automatically removed when the test and all its subtests complete.

This saves us at least 2 lines (error check, and cleanup) on every instance, or in some cases adds cleanup that we forgot.

Reference: https://pkg.go.dev/testing#T.TempDir

```go
// before
func TestFooBefore(t *testing.T) {
	tmpDir, err := os.MkdirTemp("", "")
	require.NoError(t, err)
	defer os.RemoveAll(tmpDir)
}

// now
func TestFooNow(t *testing.T) {
	tmpDir := t.TempDir()
	_ = tmpDir // use tmpDir in the test body
}
```

Signed-off-by: Eng Zer Jun <engzerjun@gmail.com>

* fix hard-coded timeout and error panic in API archive download endpoint

This commit updates the `GET /api/v1/repos/{owner}/{repo}/archive/{archive}`

endpoint which prior to this PR had a couple of issues.

1. The endpoint had a hard-coded 20s timeout for the archiver to complete after

which a 500 (Internal Server Error) was returned to client. For a scripted

API client there was no clear way of telling that the operation timed out and

that it should retry.

2. Whenever the timeout _did occur_, the code used to panic. This was caused by

the API endpoint "delegating" to the same call path as the web, which uses a

slightly different way of reporting errors (HTML rather than JSON for

example).

More specifically, `api/v1/repo/file.go#GetArchive` just called through to

`web/repo/repo.go#Download`, which expects the `Context` to have a `Render`

field set, but which is `nil` for API calls. Hence, a `nil` pointer error.

The code addresses (1) by dropping the hard-coded timeout. Instead, any

timeout/cancelation on the incoming `Context` is used.

The code addresses (2) by updating the API endpoint to use a separate call path for the API-triggered archive download. This avoids producing HTML error pages for API clients; errors are now returned as JSON.

Signed-off-by: Peter Gardfjäll <peter.gardfjall.work@gmail.com>

The recovery, API, Web and package frameworks all create their own HTML

Renderers. This increases the memory requirements of Gitea

unnecessarily with duplicate templates being kept in memory.

Furthermore, the reloading framework in dev mode involves locking and recompiling all of the templates on each load. This can hide concurrency issues and is inefficient.

This PR stores the templates renderer in the context and stores this context in the NormalRoutes. It then creates a fsnotify.Watcher framework to watch files.

The watching framework is then extended to the mailer templates which

were previously not being reloaded in dev.

Then the locales are simplified to a similar structure.

Fix #20210

Fix #20211

Fix #20217

Signed-off-by: Andrew Thornton <art27@cantab.net>